- Table of Contents

- 1. Introduction

- 2. Requirements

- 3. Recommendations

- 4. Quick Start

- 5. Administering Inca with incat

- 5.1. Repositories

- 5.2. Resource Configuration

- 5.3. Suites

- 5.4. Committing/Saving

- 5.5. Resource Access Methods

- 5.6. Requiring Resource Administrator Approval

- 5.7. Proxies

- 5.8. Suspend Execution

- 5.9. Using Resource Macros

- 5.10. Series Comparison and Notification

- 6. Data Display (Data Consumers)

- 6.1. Current Data

- 6.1.1. Tabular Suite Summary View

- 6.1.2. Map View using Google Map API

- 6.2. Reports

- 6.2.1. Pass/Fail Graphs and Error Messages

- 6.2.2. Metric Reports

- 6.2.3. Average Pass Rate by Resource/Suite

- 6.2.4. Series Error Summary

- 6.2.5. Average Pass History

- 6.3. Query

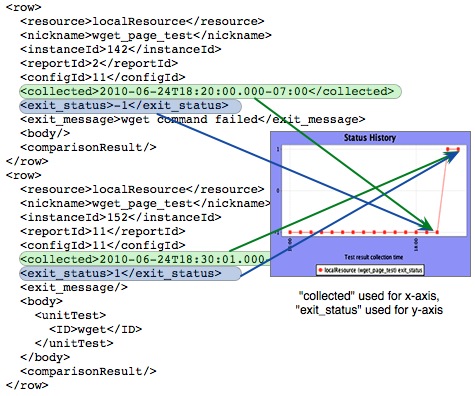

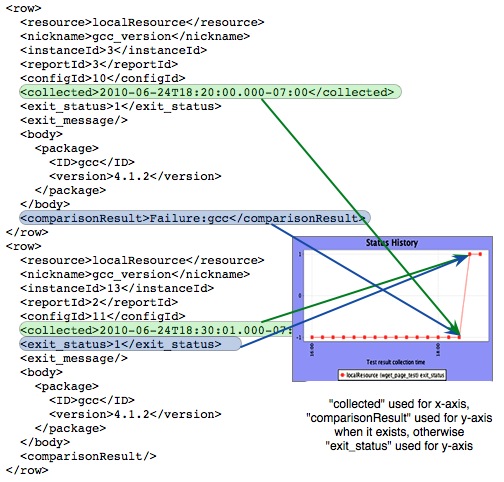

- 6.3.1. Create Graph

- 6.3.2. Create Stored Query

- 6.3.3. Manage Stored Queries

- 6.4. Admin

- 6.4.1. List Running Reporters

- 6.4.2. View/Change Configuration

- 6.5. Report Details

- 6.5.1. Run Nows

- 6.5.2. Knowledge Base

- 6.6. Report Summaries

- 6.7. Description of Data Consumer Files

- 6.8. REST URLs

- 6.9. Changing the Look

- 6.9.1. Colors and Fonts

- 6.9.2. HTML Layout

- 6.9.3. Custom Page Header

- 6.10. Displaying Errors Neutrally

- 6.11. Changing the Installation Location

- 6.12. Changing Ports

- 6.13. Disabling HTTP

- 6.14. Changing HTTPS credential

- 6.15. Adding Password Protection

- 7. Writing Custom Data Consumers

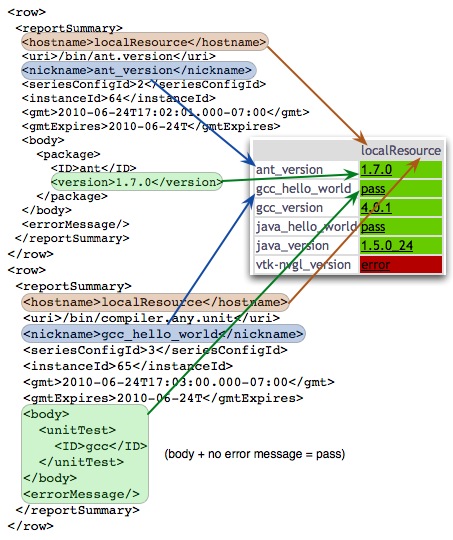

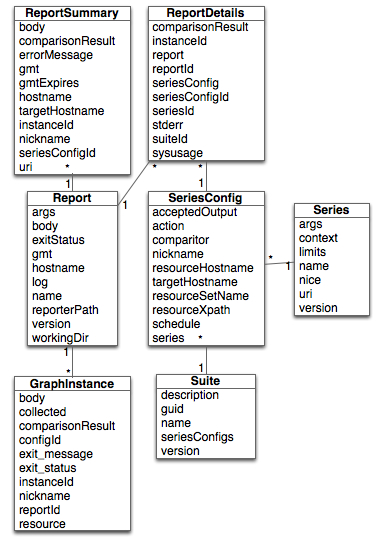

- 7.1. Understanding Inca XML Data

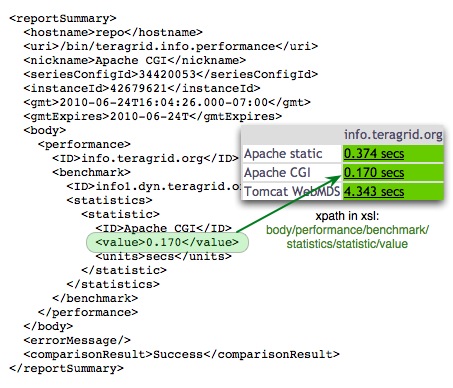

- 7.1.1. Report Summaries

- 7.1.2. Graph Instances

- 7.1.3. Report Details

- 7.2. Retrieving Data

- 7.2.1. REST APIs

- 7.2.2. Web Services API

- 7.2.3. Inca Client APIs

- 8. Reporters and Repositories

- 8.1. Executing Reporters

- 8.2. Writing Reporters

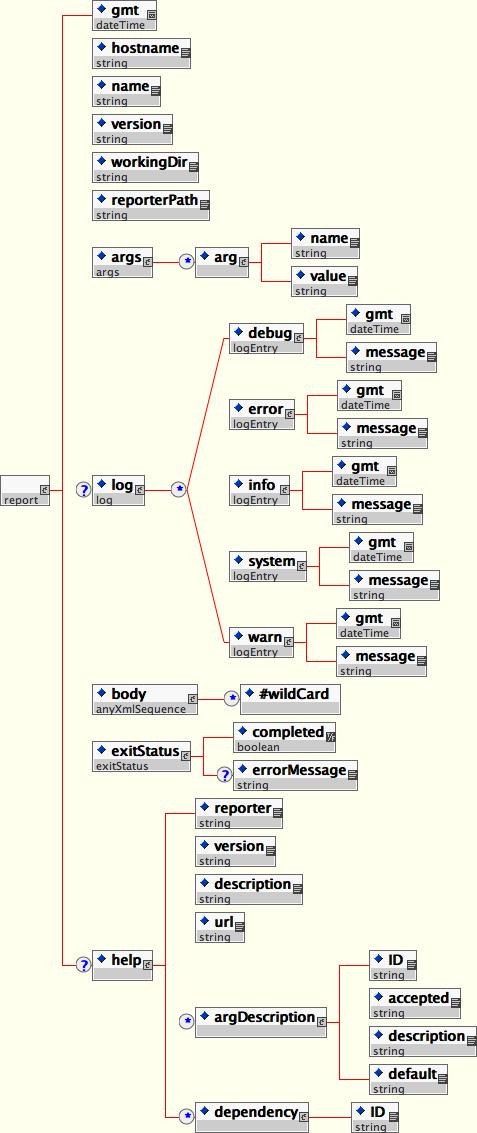

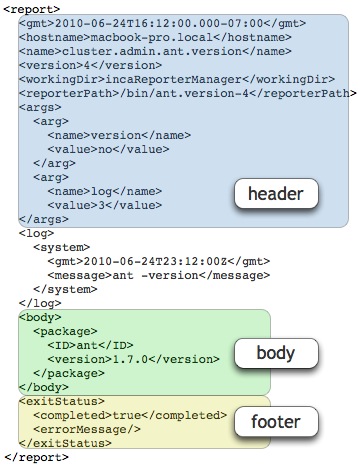

- 8.2.1. Reporter XML Schema

- 8.2.2. Reporter XML Output

- 8.2.3. Reporter APIs

- 8.2.4. Reporter Writing Tips

- 8.3. Reporter Repositories

- 9. Web Services

- 10. Security

- 11. Advanced Configuration

- 11.1. Inca Component Options

- 11.2. Customizing Series Notification

- 11.3. Report Filtering

- 11.3.1. Downtime Filter

- 11.3.2. All2All Filter

- 11.3.3. Multiple Filters

- 11.4. Depot Database Configuration

- 11.5. Depot Fault Tolerance

- 11.6. Manual Access Method

- 11.7. Batch Systems

- 11.8. Manual Run Now

- 11.9. Source Distributions

- 12. Component Man Pages

- 12.1. Depot

- 12.2. Agent

- 12.3. Reporter Manager

- 12.4. Consumer

- 12.5. Incat

- 12.6. Inca Web Services

- 13. Troubleshooting

- 13.1. Log Files

- 13.2. Authentication Problems

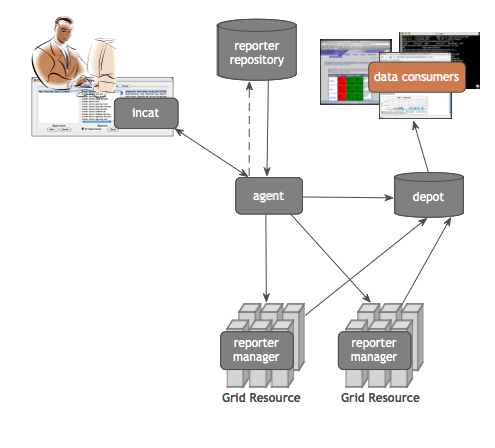

1. Introduction

Determining whether a Grid is up in the face of complex Grid software deployments can be difficult and depends on the types of applications and users that utilize it. By detailing a set of software, services, and features that should be available on a Grid in a machine-readable format, a Grid can be tested periodically by an automated system to verify its health and usability to users. To this end, we have developed Inca as a flexible framework to perform periodic, user-level functionality testing and performance measurement of Grid systems. It includes mechanisms to schedule the execution of information gathering scripts, and to collect, archive, publish, and display data.

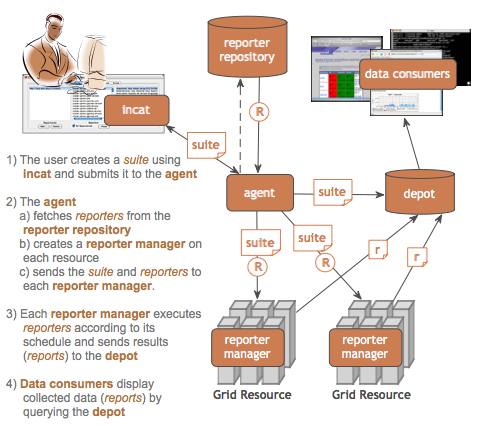

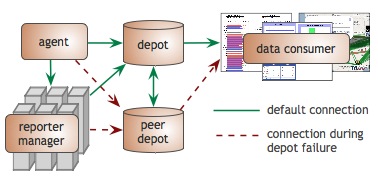

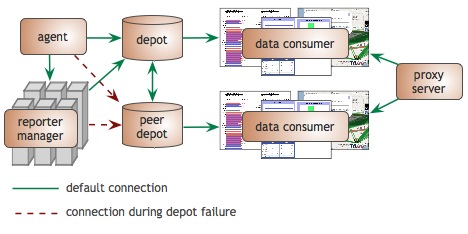

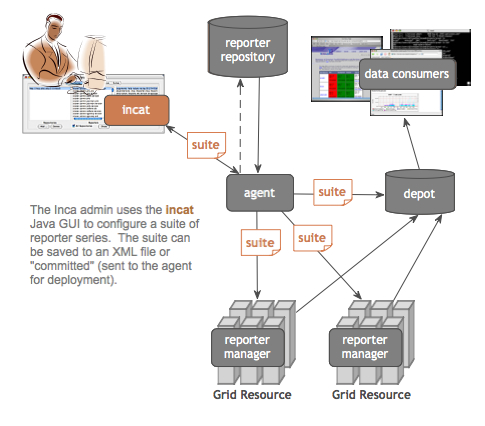

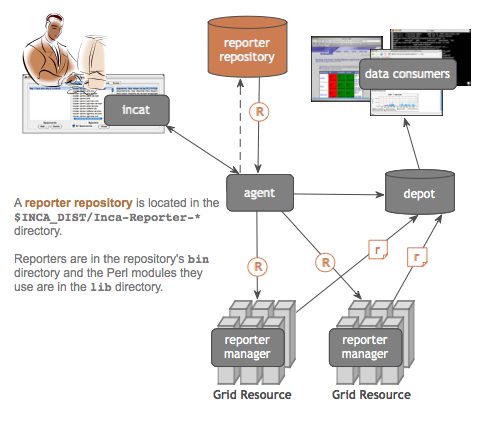

The architecture of Inca and a description of its components are shown in the figures below.

- A reporter is an executable program that tests or measures some aspect of the system or installed software.

- A report is the output of a reporter and is an XML document complying with the reporter schema in Section 8.2.1.

- A suite specifies a set of reporters to execute on selected resources, their configuration, and frequency of execution.

- A reporter repository contains a collection of reporters and is available via a URL.

- A reporter manager is responsible for managing the schedule and execution of reporters on a single resource.

- An agent is a server that implements the configuration specified by the Inca administrator.

- incat is a GUI used by the Inca administrator to control and configure the Inca deployment on a set of resources.

- A depot is a server that is responsible for storing the data produced by reporters.

- A data consumer is typically a web page client that queries a depot for data and displays it in a user-friendly format.

2. Requirements

The Inca server components have the following requirements:

- Sun JDK or JRE 1.4.2_09 or greater. When run with Java 1.4, the memory usage of Inca components sometimes grows significantly over time. This appears to be a problem in the Java run-time that is fixed in Sun Java 1.5 and 1.6, so use those versions if possible.

- Perl 5.8.6 or greater

- OpenSSL-0.9.6[jkl] or OpenSSL-0.9.7b or greater

- GNU tar (i.e., no limit on filename length)

Inca clients (reporter managers) running on *nix resources should have:

- Perl 5.8.x or greater

- OpenSSL-0.9.6[jkl] or OpenSSL-0.9.7b or greater

- GNU tar (i.e., no limit on filename length)

- make or gmake

- a C compiler

Inca clients (reporter managers) running on Windows resources should have cygwin installed with the following modules (tested on XP):

- ssh server

- perl

- make

- gcc

- openssl & openssl-dev

- vim (not required for reporter manager but generally useful)

3. Recommendations

We recommend that Inca be run under a regular user account and not as root for the following reasons:

- To best detect user-level problems, Inca should be run under a regular user account with the default environment setup.

- Inca does not require any special privileges to run.

Furthermore, we recommend that a valid GSI credential be obtained for this regular user account so that tests of Grid software requiring proxy certificates can be executed. Please request a GSI credential from your virtual organization's Certificate Authority (CA) and consult your organization's security policy regarding GSI credential use. Section 5.7 describes using proxy credentials in the Inca framework.

4. Quick Start

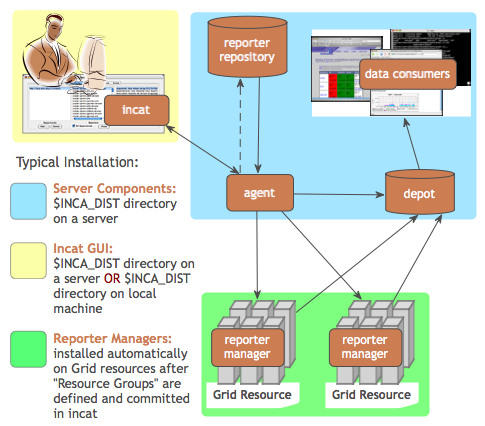

This section describes how to download, install and verify the Inca 2.6 binary release. The figure below represents a typical installation.

- Step 1: Download the installer script

- Step 2: Run the installer script

- Step 3: Change to the Inca installation directory

- Step 4: Create credentials for Inca components

- Step 5: Start up Inca components with a sample default configuration

- Step 6: View the Inca web server pages

- Step 7: View the sample default configuration using the Inca GUI tool

Download the incaInstall.sh script:

% wget http://inca.sdsc.edu/releases/2.6/incaInstall.sh

Execute the install script to download the binary distribution from our website and unpack it into an installation directory. The installation directory is represented by the $INCA_DIST environment variable - it may be useful to set this variable now.

% sh incaInstall.sh $INCA_DIST core

$INCA_DIST is the location of the directory where you want to install Inca. You should see something like:

    Retrieving http://inca.sdsc.edu/releases/current/inca-common-java-bin.tar.gz
    --12:49:38-- http://inca.sdsc.edu/releases/current/inca-common-java-bin.tar.gz
    => `inca-common-java-bin.tar.gz'
    Resolving inca.sdsc.edu... 198.202.75.28
    Connecting to inca.sdsc.edu|198.202.75.28|:80... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 5,921,461 [application/x-tar]
    100%[======================>] 5,921,461 1.23M/s ETA 00:00
    12:49:43 (1.18 MB/s) - `inca-common-java-bin.tar.gz' saved [5921461/5921461]
    ...
    common-java installed
    ...
    agent installed
    ...
    consumers installed
    ...
    depot installed
    ...
    incat installed

Change to the top level directory of your Inca installation:

% cd $INCA_DIST

Create certificates for the Inca components (enables secure component communication):

% ./bin/inca createauth

NOTE: the default life of 2.6 certificates is 5 years (1 year in earlier releases). To replace expired certificates, execute "bin/inca createauth" again to create new certificates and "bin/inca agent -u <resource group> -logfile var/upgrade.log" to update the certificates on the reporter managers.

Output from "bin/inca createauth" looks similar to the text below. When completed, you should see a certificate/key created for each of the four Inca components and stored in $INCA_DIST/etc:

    password> (choose a password for your Inca installation administration)
    Confirm password> (reenter the same password)
    Generating a 512 bit RSA private key
    .++++++++++++
    writing new private key to 'clientx.sdsc.edukey.pem'
    -----
    agent
    Generating a 512 bit RSA private key
    .++++++++++++
    writing new private key to 'agentkey.pem'
    ...
    writing new private key to 'consumerkey.pem'
    ...
    writing new private key to 'depotkey.pem'
    ...
    writing new private key to 'incatkey.pem'
    ...

Start the Inca server components and deploy the sample default configuration (NOTE: this command only needs to be executed ONCE. The components started in this step can later be stopped with "./bin/inca stop all" and started with "./bin/inca start all". A restart command is also available). The Inca server components are agent, depot, and consumer and will be started on ports 6323, 6324, and 8080 respectively. Please edit the inca.properties file in etc/common to change the default ports for the agent and depot. For the consumer, the port number can be customized in etc/jetty.xml. More information can be found in Section 11.

% ./bin/inca default

You should see something like:

    password> (enter password from the last step)
    Preparing to deploy default Inca configuration...
    Initializing Inca configuration...
    ** Warning: this will erase any previously collected reporter state on the Inca depot and configuration on the agent
    Do you wish to continue (y/n)? y
    Initializing depot...
    Initializing c3p0 pool... com.mchange.v2.c3p0.PoolBackedDataSource@90....
    Database Initialization Completed
    done
    Initializing agent
    done
    Started Inca agent
    Started Inca consumer
    Started Inca depot
    Sleeping for 20 seconds while the components come online
    Deploying default configuration

During this step:

three server components are started on localhost:

    % ps | grep java
    3527 p1 S 0:14.21 /usr/bin/java -Xmx256m edu.sdsc.inca.Agent -l agent.log
    3560 p1 S 0:17.63 /usr/bin/java -Xmx256m edu.sdsc.inca.Depot -l depot.log
    3593 p1 S 0:15.43 /usr/bin/java -Xmx256m edu.sdsc.inca.Consumer consumer.log

a sample test suite called sampleSuite is sent to the agent. The sample suite contains a schedule for executing each of the following reporters every ten minutes:

    cluster.admin.ant.unit (ant_helloworld_compile_test)
    cluster.admin.ant.version (ant_version)
    cluster.compiler.any.unit (gcc_hello_world)
    cluster.compiler.gcc.version (gcc_version)
    cluster.compiler.any.unit (java_hello_world)
    cluster.java.sun.version (java_version)
    cluster.interactive_access.openssh.version (openssh_version)
    cluster.security.openssl.version (openssl_version)
    viz.lib.vtk-nvgl.version (vtk-nvgl_version)
    grid.wget.unit (wget_page_test)

a client component is started on localhost:

    % ps | grep Manager
    5382 p1 S 0:02.14 /usr/bin/perl reporter-manager -d incas://client64-236.sdsc.edu:6324 -c etc/rmcert.

The agent receives the sampleSuite suite and installs a reporter manager on localhost in ~/incaReporterManager (this takes 1-5 minutes; view the progress of the build in ~/incaReporterManager/build.log).

After the reporter manager is built, it registers itself with the agent. The agent will send the reporter manager the set of reporters, libraries, and execution schedule.

The reporter manager executes reporters based on the execution schedule and sends reports to the depot.
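Before opening the web pages, it can be useful to confirm that the three server components are accepting connections on their default ports (6323 for the agent, 6324 for the depot, and 8080 for the consumer). The following is a minimal Python sketch of such a check. It is not part of Inca; the names DEFAULT_PORTS and port_is_open are hypothetical, and the check only tests TCP reachability, not that the components are healthy.

```python
import socket

# Default ports from this Quick Start; adjust them if you changed the configuration.
DEFAULT_PORTS = {"agent": 6323, "depot": 6324, "consumer": 8080}


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    for component, port in DEFAULT_PORTS.items():
        state = "listening" if port_is_open("localhost", port) else "not reachable"
        print(f"{component} (port {port}): {state}")
```

If a component is reported as not reachable, the log files in $INCA_DIST/var are the first place to look.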

To view results and verify that your Inca installation is working correctly, open the URL below in a browser. Starting a web server is not required, but you may need to replace "localhost" with the full hostname of your machine.

http://localhost:8080

You should see a start up screen similar to the figure below initially indicating an empty Inca configuration.

After a few minutes (when the consumer cache is refreshed), reload the page and you should see a start up screen similar to the figure below showing one suite called sampleSuite (our default sample configuration), a resource group called defaultGrid, a resource group called localSite, and one resource called localResource which is a nickname for the machine you installed an Inca reporter manager on.

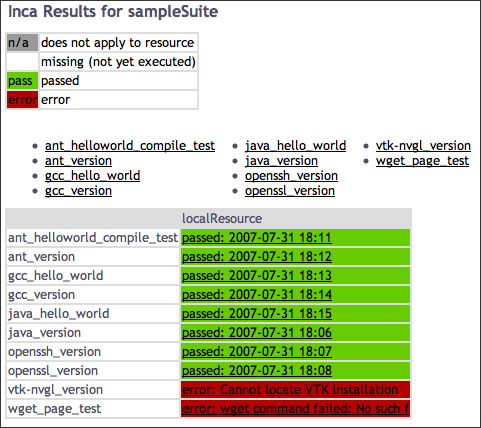

Select sampleSuite and defaultGrid and press the Submit button. You should see a page similar to the figure below with the reporter series scheduled to execute on localhost. The reporters test a small set of compiler, grid services, math library and scientific visualization packages. Each reporter may perform a software package version query (e.g., gcc) and/or unit test (e.g., java -- unit test name is java_hello_world). Most boxes should have the ? icon indicating that the reports have not yet been received.

Refresh the page after a few minutes and you should begin to see more boxes filled in until it looks like the figure below. If you do not see results filled in, check the .log files in $INCA_DIST/var for ERROR or Exception. See Section 13.1 for more information about logging.
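The log check described above can be scripted. The following is a minimal Python sketch, assuming only that the log files end in .log and are located in $INCA_DIST/var; the function name find_log_problems is hypothetical and not part of Inca.

```python
import os
from pathlib import Path


def find_log_problems(log_dir):
    """Return (file name, line number, text) for each log line containing ERROR or Exception."""
    log_dir = Path(log_dir)
    if not log_dir.is_dir():
        return []
    problems = []
    for log_file in sorted(log_dir.glob("*.log")):
        with open(log_file, errors="replace") as handle:
            for number, line in enumerate(handle, start=1):
                if "ERROR" in line or "Exception" in line:
                    problems.append((log_file.name, number, line.rstrip("\n")))
    return problems


if __name__ == "__main__":
    # Assumption: INCA_DIST is set as in the Quick Start; otherwise the current directory is used.
    var_dir = Path(os.environ.get("INCA_DIST", ".")) / "var"
    for name, number, text in find_log_problems(var_dir):
        print(f"{name}:{number}: {text}")
```

An empty result means no line matched; it does not by itself prove that reports are arriving.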

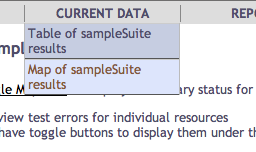

You can also navigate to this page from the "table of sampleSuite results" link under "Current Data" in the page header.

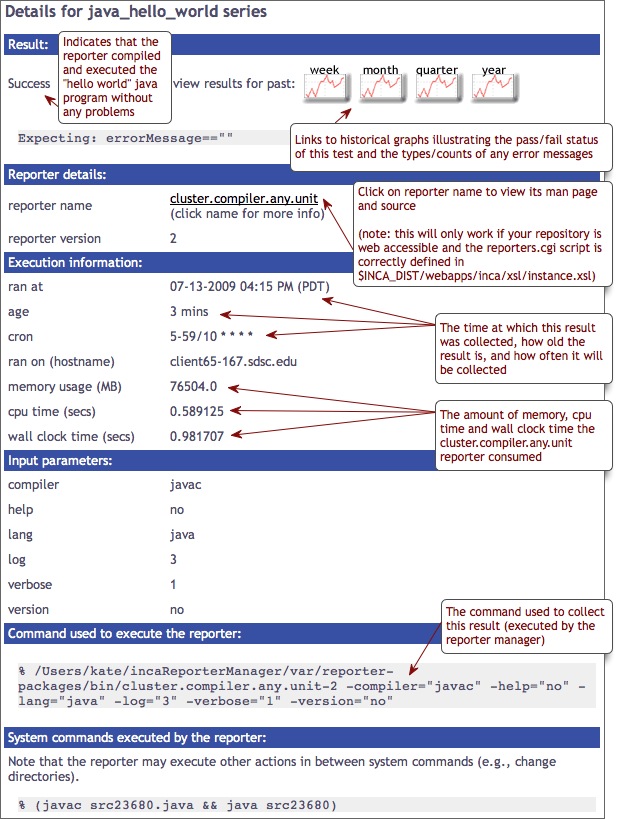

Click on any red/green box to see the details of how the result was collected. The figure below shows the details of the cluster.compiler.any.unit reporter that compiles and executes a small hello world java program.

View the Inca sample default configuration using the Inca GUI tool, incat (Inca Administration Tool). You can use incat to make changes to the default configuration (e.g., add a new resource to defaultGrid or add new tests to sampleSuite). See Section 5 for more information about using incat.

Use the following command to start incat:

% ./bin/inca start incat -A localhost:6323

You should now see the Java GUI window appear on your local machine. If you don't see Java GUI windows pop up like those in Figure 8 and Figure 9, it is probable that X-Window forwarding is not set up correctly between the machine where you installed Inca and your local machine. You can either configure X-Window forwarding, or you can start incat on a local machine as described in step 8.

Once incat has information from the agent, the following screen will display:

THIS STEP IS OPTIONAL. If you don't see Java GUI windows pop up like those in Figure 8 and Figure 9, you can use this step to install incat on a local machine.

Copy the incaInstall.sh script to a local machine.

% wget http://inca.sdsc.edu/releases/2.6/incaInstall.sh

Install incat on a local machine:

% ./incaInstall.sh $INCA_DIST incat

Copy the incat key, certificate, and trusted directory from the original machine to your local machine:

% scp orig.machine:$ORIG_INCA_DIST/etc/incatkey.pem $INCA_DIST/etc/

% scp orig.machine:$ORIG_INCA_DIST/etc/incatcert.pem $INCA_DIST/etc/

% scp "orig.machine:$ORIG_INCA_DIST/etc/trusted/*" $INCA_DIST/etc/trusted/

Start the incat component on a local machine with the agent hostname from the original machine:

% cd $INCA_DIST; ./bin/inca start incat -A ORIGHOST:6323 &

5. Administering Inca with incat

Inca provides a graphical administration tool, named incat, that allows you to configure your deployment. Using incat, you can specify the repositories from which Inca should retrieve reporters, the hosts where you wish to run reporters, and which reporters you wish to run on each host.

You can connect incat to a running Inca Agent via the -A option, e.g., "cd $INCA_DIST; ./bin/inca start incat -A localhost:6323".

As mentioned in the Quick Start guide, you can execute "./bin/inca default" from the command line to install a default Inca configuration. The discussion below describes how you would use incat to specify the same configuration. Before continuing, use the inca script to start both a Depot and an Agent on your host.

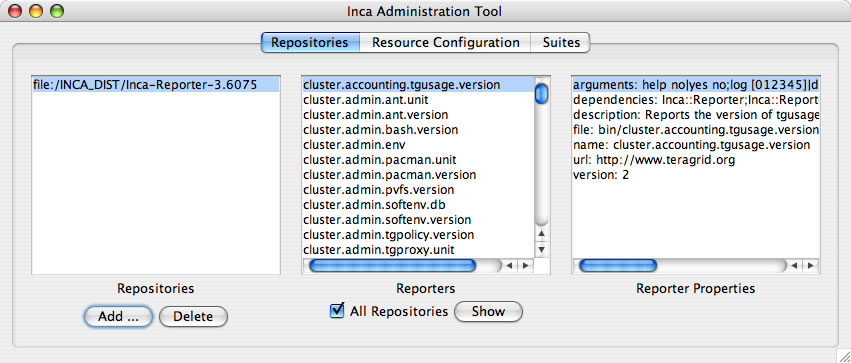

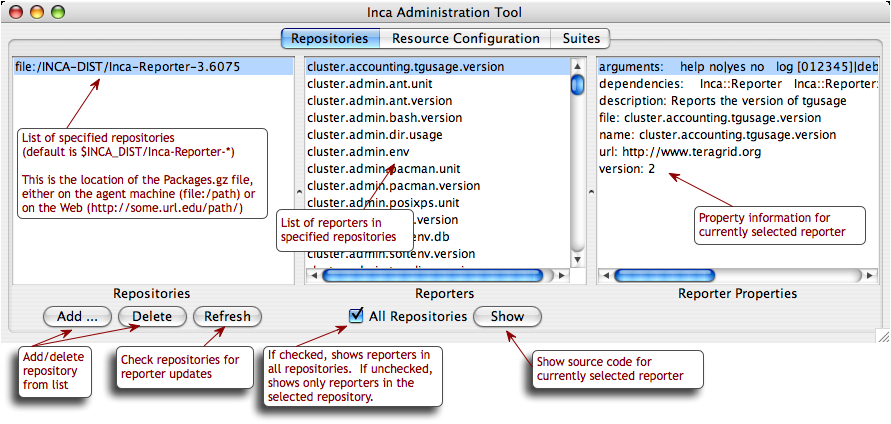

5.1. Repositories

Incat begins by showing the panel for Inca reporter repositories (as shown in Figure 10). The default Inca configuration retrieves reporters from the $INCA_DIST/Inca-Reporter-* repository on the agent machine. To add this repository to your list, press the Add button in the Repositories section, enter the file:/ location of the Packages.gz file in the pop-up window that appears, then press the OK button. Within a few seconds you should see the repository appear in the Repositories list, a set of reporters in the Reporters list, and properties for the first reporter in the Reporter Properties list. Reporter source code is viewable by double clicking on a reporter name or by selecting the reporter name and pressing the "Show" button.

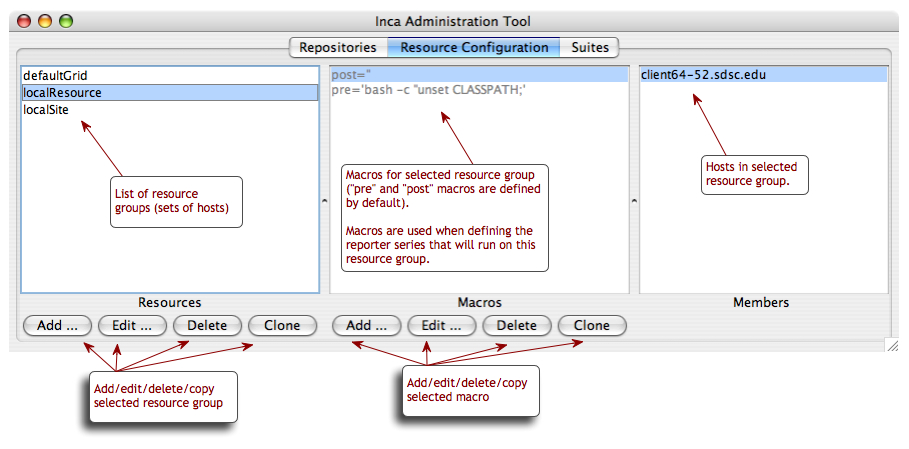

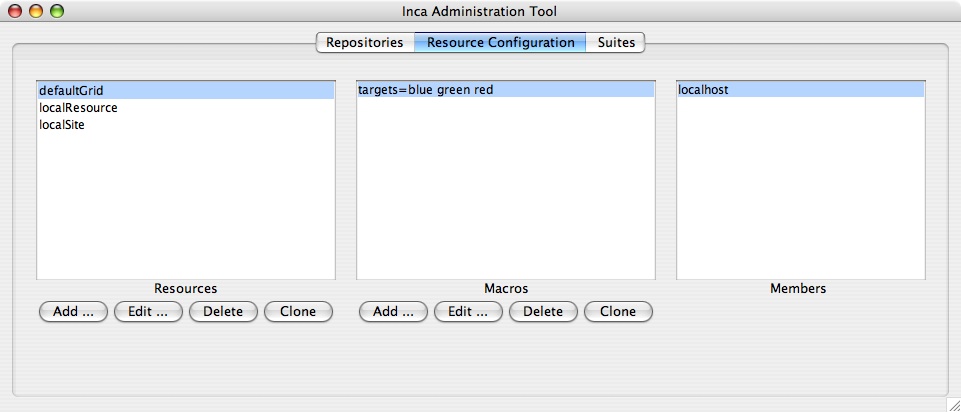

5.2. Resource Configuration

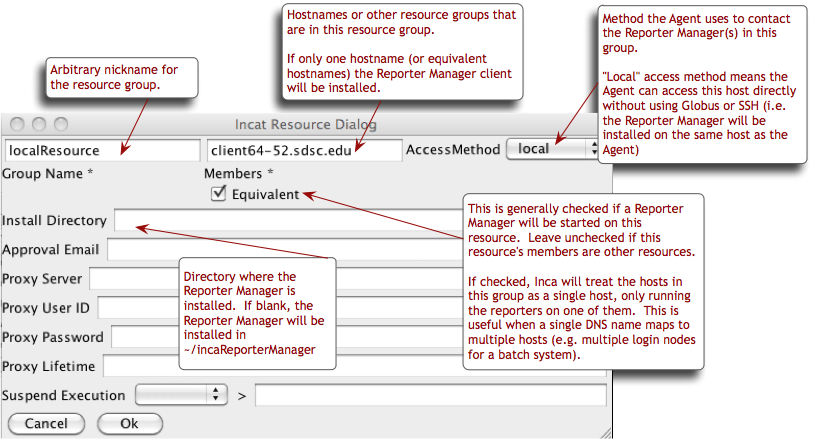

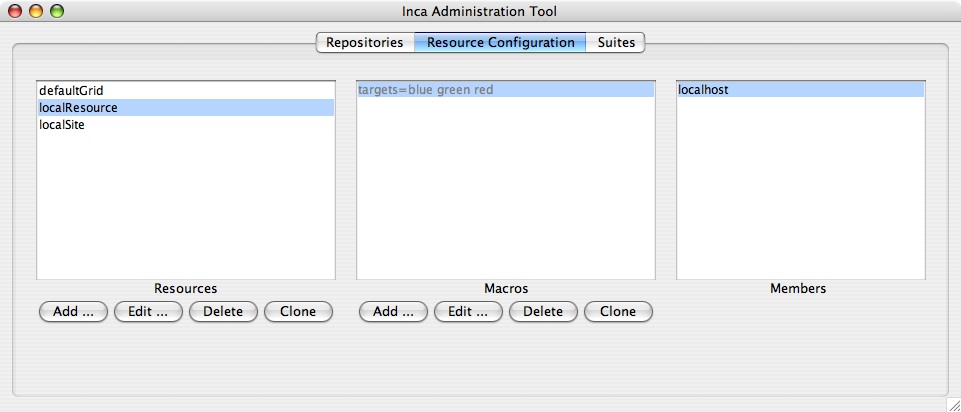

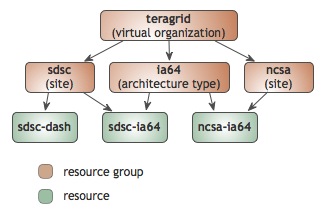

The default Inca configuration defines three resource groups -- a group called "localResource" that specifies the host where the Inca Agent will launch a new Reporter Manager (localhost) and two container groups called "localSite" (contains "localResource") and "defaultGrid" (contains "localSite") that can be extended to include any other hosts running Reporter Managers. The default resource configuration is shown in Figure 11.

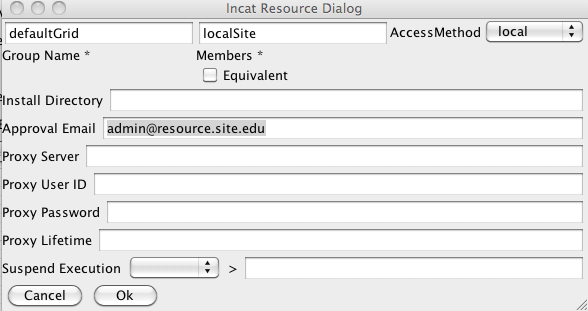

The configuration above can be duplicated in an empty Inca installation by pressing the Resource Configuration tab near the top of the incat window and then entering information about the hosts on which you want Inca to run reporters. To define the default groups above in incat, press the Add button in the Resource Groups section to open the resource group edit dialog shown in Figure 12.

In the Group Name text box, enter the name "localResource" as a nickname for the machine the Reporter Manager client runs on. Enter "localhost" in the Members text box and select "local" as the access method (for a description of access methods see Section 5.5). Press OK to complete entry of this resource group. Incat will close the resource group edit dialog and will display localResource in the Resource Groups section of the Resource Configuration panel.

Press the Add button again to add a second resource group. Give this one the name "defaultGrid" and enter "localResource" in the Members text box. This tells incat that any hosts in the "localResource" group (localhost, in this case) are also part of the defaultGrid group. If you defined other groups, "siteB", "siteC", etc., you could include these in defaultGrid by listing them in the Members text box, separated by spaces. Press OK to complete entry of this resource group. In the Resource Groups list, select each group and notice that localhost is listed in the Members panel.
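To make the nesting of resource groups concrete, here is a minimal Python sketch of how group members could be resolved to hosts. It is illustrative only: the dictionary layout and the resolve_hosts function are assumptions, not the data structures incat or the Agent actually use. The group names follow the default configuration described at the start of this section.

```python
def resolve_hosts(group, groups, _seen=None):
    """Expand a resource group into the list of hosts it ultimately contains.

    `groups` maps a group name to its space-separated Members string.
    A member that is itself a group name is expanded recursively;
    anything else is treated as a host name.
    """
    seen = _seen if _seen is not None else set()
    if group in seen:  # guard against groups that contain each other
        return []
    seen.add(group)
    hosts = []
    for member in groups[group].split():
        if member in groups:
            expanded = resolve_hosts(member, groups, seen)
        else:
            expanded = [member]
        for host in expanded:
            if host not in hosts:
                hosts.append(host)
    return hosts


# Hypothetical representation of the default configuration.
default_groups = {
    "localResource": "localhost",
    "localSite": "localResource",
    "defaultGrid": "localSite",
}

for name in default_groups:
    print(name, "->", resolve_hosts(name, default_groups))
```

Every group in this example resolves to localhost, which matches what the Members panel shows when each group is selected.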

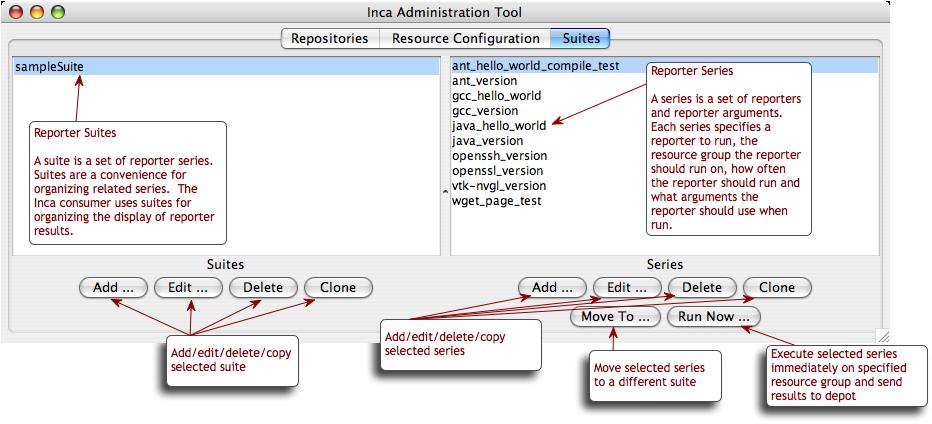

5.3. Suites

Pressing the Suites tab near the top of the incat window takes you to incat's suite/series specification panel. Here you specify the reporters you want to run, the resource groups to run them on, how frequently to run them, and the arguments to use when running them.

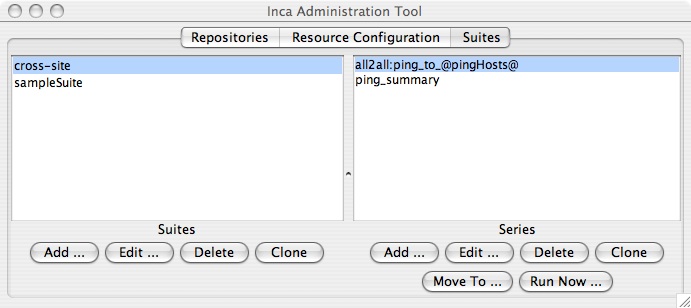

The default Inca configuration defines a single suite named sampleSuite that contains ten series as shown in Figure 13.

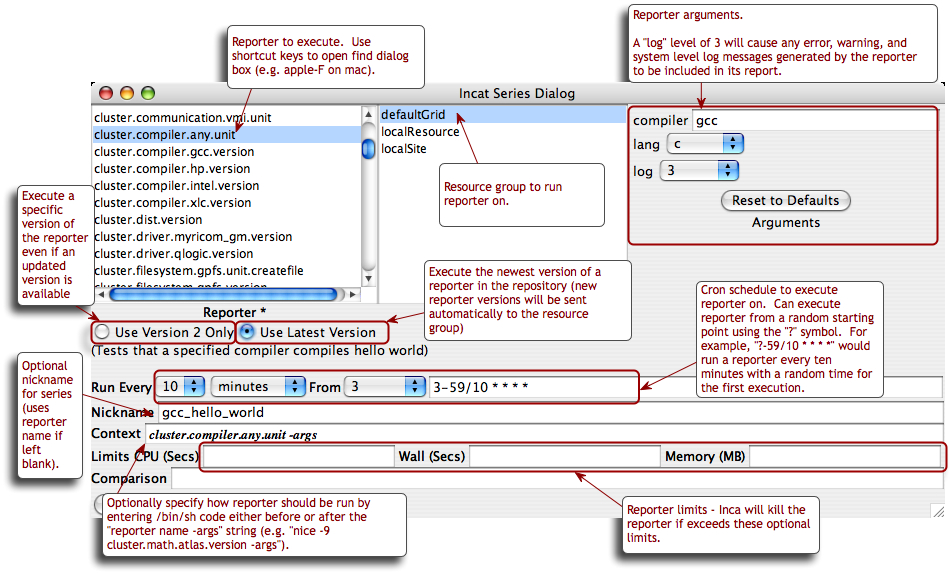

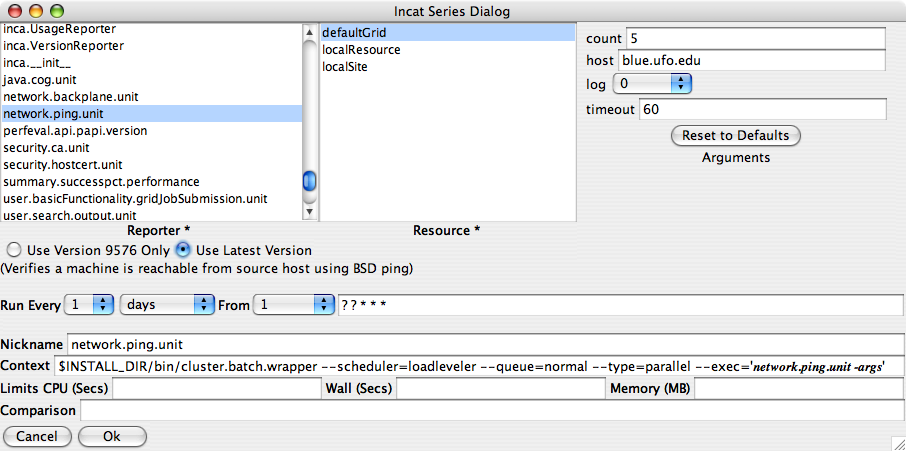

In a new Inca installation, you can add the default suite above by pressing the Add button in the Suites section of the panel, entering "sampleSuite" in the pop-up window, then pressing the OK button. To add series to the new suite, press the Add button in the Series section to open the incat series dialog and configure each like the gcc_hello_world series in Figure 14.

The bottommost box in the series dialog allows you to test the output of the reporter and send email or take other actions if the test fails. This is covered in Section 5.10 below; for this series leave this box blank. Press the OK button, and incat will close the series dialog box and add the series to the Series list in the suite/series specification panel.

The other nine series in the default Inca configuration are composed similarly to the first one. Press the Add button in the series section for each of them, then set the values in the series dialog as specified in the table below. Set the log argument for each series to 3 and the frequency to 10 minutes.

Table 1. Default Configuration Series

| Reporter | Nickname | Arguments |
|---|---|---|
| cluster.admin.ant.unit | ant_helloworld_compile_test | |
| cluster.admin.ant.version | ant_version | |
| cluster.compiler.gcc.version | gcc_version | |
| cluster.compiler.any.unit | java_hello_world | compiler: javac; lang: java |
| cluster.java.sun.version | java_version | |
| cluster.interactive_access.openssh.version | openssh_version | ssh: ssh |
| cluster.security.openssl.version | openssl_version | |
| viz.lib.vtk-nvgl.version | vtk-nvgl_version | |
| grid.wget.unit | wget_page_test | page: http://cnn.com/index.html |

5.4. Committing/Saving

Your Inca deployment configuration is now complete. At this point, it's a good idea to use the "Save" option in incat's File menu to write the configuration to a file (Figure 15). That way, you have a local copy of the configuration that you can later modify. The file is formatted XML; if you're curious, you can read through it to see how incat represents the information you've entered.

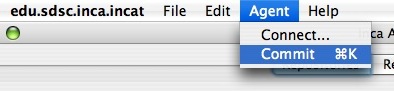

Although your configuration is complete, it's not yet active. To tell Inca to begin running reporters, you need to have incat send your configuration to your Inca Agent. If you started incat with the -A argument, then you're already connected to your Agent. Otherwise, use the "Connect" option in incat's Agent menu to establish a connection. Once you're connected, you can use the "Commit" option in the Agent menu to send the configuration to the Agent (Figure 16). In response, the Agent will install the Inca Reporter Manager code on the host specified in incat's "Resource Configuration" panel and begin running the reporters you specified in the "Suite Series" panel.

5.5. Resource Access Methods

The Inca Agent can use the following access methods to stage and start Reporter Managers on resources:

ssh:

The most common access method. The Agent starts a Reporter Manager on a remote machine using ssh to access the remote machine. The Agent must have ssh key access to the remote machine. Reporter Manager files are copied from the Agent to the remote machine using sftp. For ssh resource groups, incat provides text boxes for you to enter the login id, password, and path to the ssh key file on the Agent machine. For security purposes, incat displays asterisks for the password and encrypts it when you save the configuration to a file.

globus2:

The Agent starts a Reporter Manager on a remote machine using Globus PreWS to access the remote machine. Reporter Manager files are copied from the Agent to the remote machine using GridFTP. When you select globus2 in the Access Method pull-down, incat provides text boxes for you to enter contact information for the resource's Globus GRAM and GridFTP servers. If you leave these boxes blank, Inca defaults to ports 2119 and 2811, respectively, for the first host in the resource group's member list. Access to Globus hosts requires an active Globus proxy on the Agent's host. You can either create a manual proxy on the Agent machine before starting, or you can store a proxy on a myproxy server and complete the four incat proxy dialog boxes (see Section 5.7) so that the Agent can obtain one as needed.

globus4:

The Agent starts a Reporter Manager on a remote machine using Globus WS to access the remote machine. Reporter Manager files are copied from the Agent to the remote machine using GridFTP. When you select globus4 in the Access Method pull-down, incat provides text boxes for you to enter contact information for the resource's Globus WS GRAM and GridFTP servers. If you leave these boxes blank, Inca defaults to ports 8443 and 2811, respectively, for the first host in the resource group's member list. Access to Globus hosts requires an active Globus proxy on the Agent's host. You can either create a manual proxy on the Agent machine before starting, or you can store a proxy on a myproxy server and complete the four incat proxy dialog boxes (see Section 5.7) so that the Agent can obtain one as needed.

local:

The Agent starts a Reporter Manager on the same machine where the Agent is running (localhost).

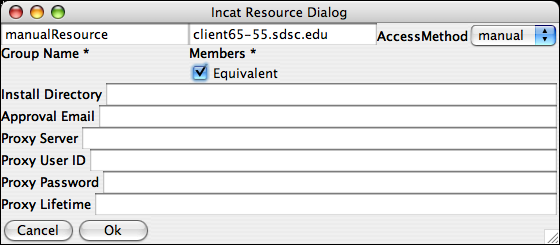

manual:

Entering a manual resource group indicates that you want complete, direct control over Inca execution on the group. For a manual resource group you must start the Reporter Manager on the command line and restart it any time you want to change the reporter series configuration for the group. The Agent will NOT automatically start a Reporter Manager for a manual resource as it will for local, ssh, or globus2 resources. See Section 11.6 for more details.

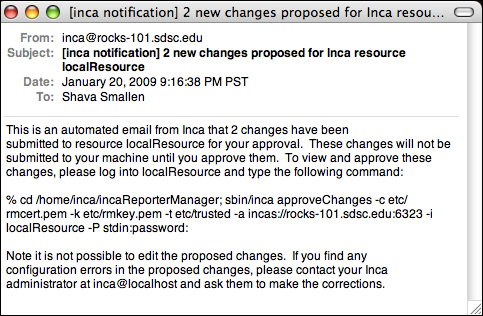

5.6. Requiring Resource Administrator Approval

By default, Inca installation changes take effect automatically once you commit them to the Agent. In circumstances where Inca changes must be approved before they take effect, enter the approver's email address in the "Approval Email" text box of the resource edit dialog. When changes to the Inca installation on this resource are committed, Inca will send approval instructions to the email specified in the resource dialog. Note that a run now request as described in Section 5.3 is not considered a change and will be forwarded automatically if that series has already been approved.

The emailed approval instructions will look like the image below by default and will describe how changes can be approved using Inca's approveChanges command. To customize the approval instruction email edit $INCA_DIST/etc/approveEmail.txt.

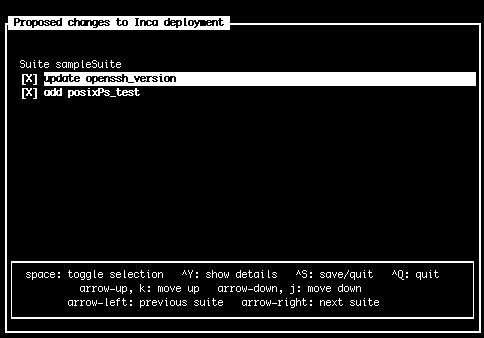

The approveChanges command allows viewing and approval of the individual changes committed using incat. Use the --help flag with the approveChanges command to see all possible options. After approveChanges is invoked, the approver will see a text menu like the one in the screen below with a list of proposed changes. More information about each change is viewable by hitting ctrl-y. By default all changes are checked. If any proposed changes are incorrect for their system, the approver should uncheck them by hitting space. After checking only correct changes for their resource, the approver should hit ctrl-s to approve the selected changes. The Agent will only transmit approved changes to the resource. The proposed changes not approved will remain in the queue and indicate that the Inca administrator has incorrect information about the resource. The approver should notify their Inca administrator of these errors to correct their proposed changes list.

The image below shows an example of more information about a change (viewable by hitting ctrl-y). In this example a change to the series logging is represented by the before and after values separated by the "=>" symbol and the green color highlighting the new log and context values. If colors aren't viewable in your terminal, try setting the TERM environment variable to "xterm-color".

Here the approveChanges command was invoked using the --color=blue,red,green flag to show unchanged attributes in blue, changed attributes in red and new values in green. Edit the $INCA_DIST/etc/approveEmail.txt file to add your own color preferences.

5.7. Proxies

For convenience, the Inca framework can be used to retrieve a proxy for the globus2 access method or for reporters that require an active proxy. Reporters that require a proxy should use the Inca::Reporter::GridProxy module described in Section 8.2.3.

Before configuring Inca to retrieve proxies, first store a proxy on a myproxy server. For information about setting up a myproxy server or storing proxies on a server, please see the official myproxy documentation.

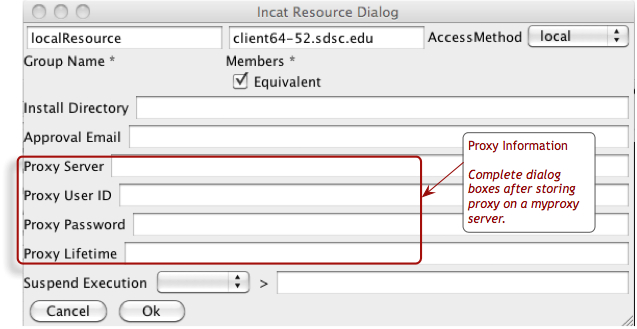

The Agent can automatically retrieve a proxy from a myproxy server if the proxy information is defined in incat as follows:

The dialog boxes are the hostname of the myproxy server, the username and password used to store the proxy, and the lifetime in hours that the agent should retrieve a proxy for (the default is 12 hours).

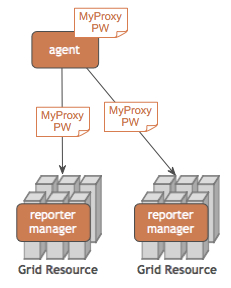

Once proxy information is committed to the agent, it can be retrieved by reporter managers. Each time a reporter manager is ready to run a reporter that needs a proxy it:

- requests the MyProxy passphrase from the agent

- uses the MyProxy command-line client to retrieve proxy credentials from a MyProxy server

- clears the MyProxy passphrase from memory

The MyProxy passphrase is stored on the agent in the $INCA_DIST/var/resources.xml file and is encrypted with the same passphrase as the agent's private key. The MyProxy passphrase passes between the reporter manager and agent over their SSL connection.

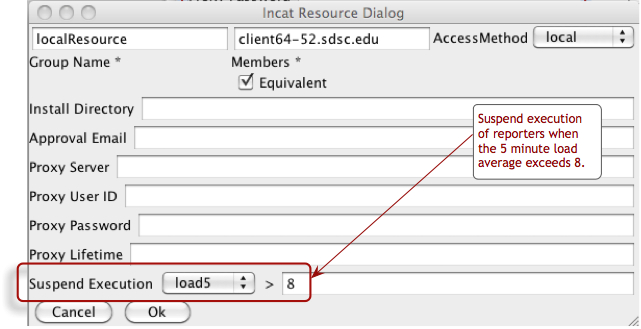

5.8. Suspend Execution

If you would like the reporter manager to suspend execution of reporters when a machine is under high load, you can specify a load threshold as shown in the figure below. When the reporter manager detects a load higher than the specified value, it skips execution of the reporter and instead returns a report indicating that the reporter was not executed due to high load. The consumer then displays this report as a neutral value in the reporter history. Specify which load average to check (the 1-, 5-, or 15-minute average: load1, load5, or load15) and the threshold as an integer.
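The threshold comparison can be pictured with a short Python sketch. This is not the reporter manager's implementation; the names LOAD_INDEX and should_skip are hypothetical, and the sketch assumes a Unix host where os.getloadavg() is available.

```python
import os

# Position of each load average in the (1, 5, 15 minute) tuple.
LOAD_INDEX = {"load1": 0, "load5": 1, "load15": 2}


def should_skip(metric, threshold, loadavg=None):
    """Return True if the chosen load average is higher than the threshold.

    `metric` is one of load1, load5, or load15; `threshold` is an integer.
    `loadavg` defaults to the current system load averages (Unix only).
    """
    if loadavg is None:
        loadavg = os.getloadavg()
    return loadavg[LOAD_INDEX[metric]] > threshold


if __name__ == "__main__":
    # Example: skip reporters while the 5-minute load average is above 4.
    print("skip reporter:", should_skip("load5", 4))
```

The comparison is strict, so a load exactly equal to the threshold does not suspend execution in this sketch.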

5.9. Using Resource Macros

Resource macros provide a shorthand for defining multiple, similar series. For example, suppose you wanted to add three series to the configuration defined above to measure the ping time to three different hosts, named blue.ufo.edu, green.ufo.edu, and red.ufo.edu. One approach would be to define a series for blue, use the Clone button in the Series section of the Suites panel to make two copies, then modify them to ping green and red.

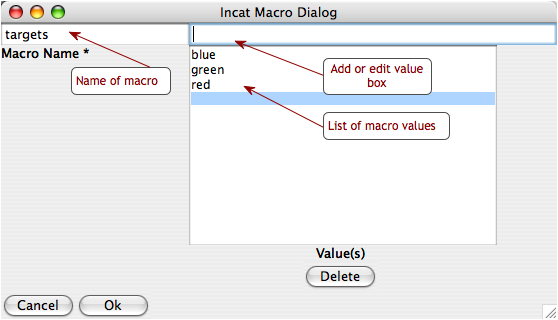

A better approach is to use a macro for the host names and let Inca replicate the series for you. In the Resource Configuration panel, click on defaultGrid in the Resource Group section. Next, press the Add button beneath the Macros section. This opens a dialog box that allows you to enter the name and value(s) of a macro associated with the current resource group.

Enter "targets" in the Macro Name text box and "blue" in the macro value edit box, then use the "Add" button or hit "enter/return" to add "green" and "red" as the second and third values of the macro. Afterwards, press OK. The definition of the targets macro now appears in the Macros section of the Resource Configuration panel. You may also edit values in the list by selecting them, changing the value in the edit value box, and hitting "enter/return". To delete macro values, select the value in the list and press the "Delete" button.

The targets macro is also defined for the other resource groups since the defaultGrid contains all other groups. As shown in Figure 17, macros appear grey if they were defined in a resource group other than the one selected. You can override an inherited macro value by selecting the macro in the Macros panel and pressing the Edit button to open the macro edit dialog. After you change the macro value and press OK, the updated macro definition will show in black in the Macros panel, indicating that the resource is no longer using the inherited value.

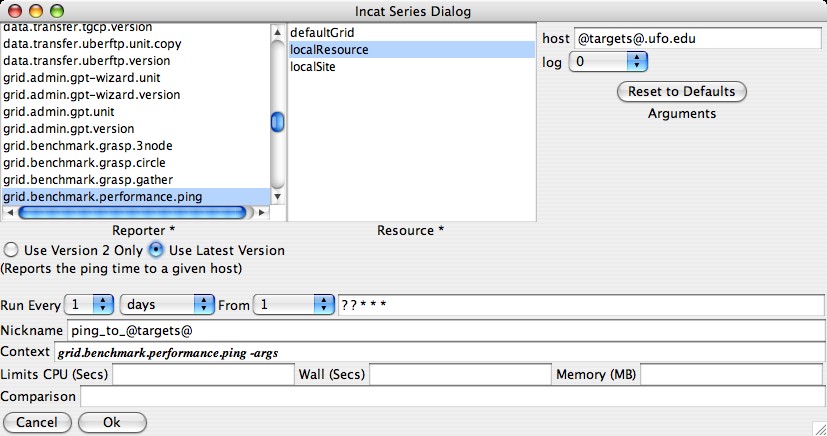

To make use of the macro you've defined, click the Suites tab, then press the Add button underneath the Series section to open the series edit dialog. In the dialog, set the reporter to grid.benchmark.performance.ping and the resource group to localResource.

In the host text box in the Arguments section of the dialog, enter "@targets@.ufo.edu". Macro references in incat are indicated by placing a "@" before and after the macro name. When the Inca Agent encounters a macro reference in a series, it makes one copy of the series for each value of the macro. Since the targets macro has three values--blue, green, and red--the Inca Agent will make three copies of this series, substituting a single value for the macro reference in each. In this case that means that you'll have one series with a host argument of "blue.ufo.edu", one with a host argument of "green.ufo.edu", and one with a host argument of "red.ufo.edu".

The Inca web pages use the series nickname when displaying series results. If you leave the series nickname at its default value, the name of the reporter, then all three series will have the same nickname. Instead, you can enter "ping_to_@targets@" in the nickname text box. The Inca Agent will expand this reference in parallel with the reference in the host argument, so your three series will have the nicknames ping_to_blue, ping_to_green, and ping_to_red, respectively.
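The parallel expansion described above can be illustrated with a short Python sketch. This is only a model of the behavior, not the Inca Agent's code: the function name and the dictionary layout are assumptions, and the sketch handles a single multi-valued macro per series.

```python
import re

# A macro reference is a name enclosed in "@" characters, e.g. @targets@.
MACRO_REF = re.compile(r"@([^@]+)@")


def expand_series(fields, macros):
    """Return one copy of the series fields per macro value.

    Every reference to the macro is replaced with the same value within a copy,
    so the host argument and the nickname stay in step.
    """
    names = {name for text in fields.values() for name in MACRO_REF.findall(text)}
    if not names:
        return [dict(fields)]
    if len(names) > 1:
        raise ValueError("this sketch supports one macro per series")
    name = names.pop()
    return [
        {key: text.replace(f"@{name}@", value) for key, text in fields.items()}
        for value in macros[name]
    ]


series = expand_series(
    {"host": "@targets@.ufo.edu", "nickname": "ping_to_@targets@"},
    {"targets": ["blue", "green", "red"]},
)
for copy in series:
    print(copy["nickname"], copy["host"])
```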

5.9.1. Using Macros from Other Resources

Sometimes it's useful to configure a series with macros from resource groups besides the one the test will execute on. For example, if a test will execute on a local resource to ping a group of remote resources, it would be helpful to use the macro for the hosts defined in the remote resource group. In the image below, we add a new group called "remoteGrid" to the default Inca configuration. The "remoteGrid" group contains two resources "remoteResourceA" and "remoteResourceB". Each of these two resources has a macro called "gramHost" defined with a GRAM gatekeeper hostname for that resource.

Now we set up a ping test that is scheduled to execute on the "localResource" group, but is configured using the "__incaHosts__" macro from the "remoteGrid" group. The only difference in syntax is to add the resource group name and an arrow to the macro name as in the image below. Since we're executing on "localResource", we have to add a "remoteGrid->" to the front of the macro name in order to get that group's macro values.

In this example we use the "__incaHosts__" macro, which is a special macro that is automatically created by Inca for each resource group and resolves to the leaf hostnames of each resource group. So the series configuration in the image below will create two ping tests to execute on "localResource": one that pings the hostname of "remoteResourceA" (sapa.sdsc.edu) and one that pings the hostname of "remoteResourceB" (cuzco.sdsc.edu). This is because we're getting all the values of the "remoteGrid" group's "__incaHosts__" macro, and the "remoteGrid" group contains "remoteResourceA" and "remoteResourceB".

We could also configure another series that is identical to the one shown in the image above except we replace the "__incaHosts__" macro with the "gramHost" macro. In this case, the two new ping tests will ping the "gramHost" hostname of "remoteResourceA" (gatekeeper.sapa.sdsc.edu) and the "gramHost" hostname of "remoteResourceB" (gatekeeper.cuzco.sdsc.edu).
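As a rough illustration of how a group-qualified reference resolves, the Python sketch below models the example configuration in memory. The data structures and the `resolve` function are hypothetical; only the group, resource, macro, and host names come from the example above.

```python
# Hypothetical in-memory model of the example configuration.
GROUPS = {"remoteGrid": ["remoteResourceA", "remoteResourceB"]}
RESOURCES = {
    "remoteResourceA": {
        "hostname": "sapa.sdsc.edu",
        "macros": {"gramHost": "gatekeeper.sapa.sdsc.edu"},
    },
    "remoteResourceB": {
        "hostname": "cuzco.sdsc.edu",
        "macros": {"gramHost": "gatekeeper.cuzco.sdsc.edu"},
    },
}


def resolve(reference):
    """Resolve a 'group->macro' reference to one value per resource in the group."""
    group, arrow, macro = reference.partition("->")
    if not arrow:
        raise ValueError("expected a reference of the form 'group->macro'")
    values = []
    for resource in GROUPS[group]:
        if macro == "__incaHosts__":
            # The special macro resolves to the hostname of each leaf resource.
            values.append(RESOURCES[resource]["hostname"])
        else:
            values.append(RESOURCES[resource]["macros"][macro])
    return values
```

Each returned value corresponds to one ping series scheduled on "localResource".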

5.9.2. Configuring "all2all" Series

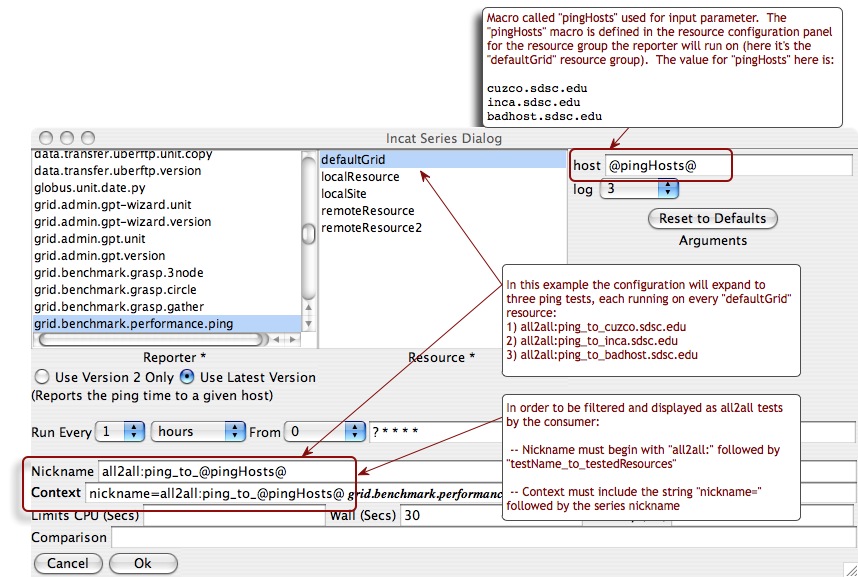

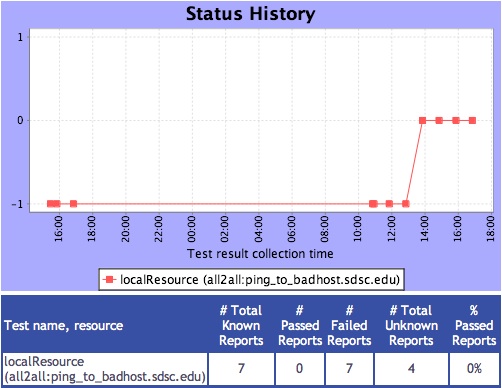

The "all2all" test results are calculated by the consumer. Generally "all2all" series are cross-site tests. The images below illustrate an example of configuring an "all2all" ping series.

First a new suite called "cross-site" is added to contain the series for our "all2all" tests:

Next we select the cross-site test that we'll execute (grid.benchmark.performance.ping) and configure the targets with a "pingHosts" macro. The consumer detects that this is an all2all series because the nickname and context contain all2all strings as described in the image below:

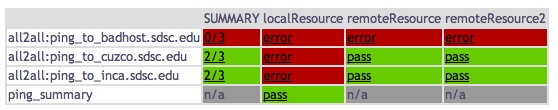

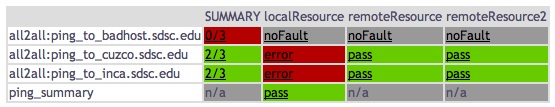

In addition to configuring the actual all2all tests, we also configure a summary series for the latest pass/fail results of each all2all test. This summary will be used by the All2AllFilter described in Section 11.3.2 to determine which resource is responsible for a failure. In the example above, we created an all2all ping test to be executed on the defaultGrid resource group. Each of the defaultGrid resources pings three other resources: cuzco.sdsc.edu, inca.sdsc.edu, and badhost.sdsc.edu. The summary series finds the latest results for each of the resources being pinged in order to assess whether a new failure is the fault of the resource doing the ping or the resource being pinged. For example, if two of the three resources in the defaultGrid group are unable to ping badhost.sdsc.edu, then when the third resource in defaultGrid also fails to ping badhost.sdsc.edu, we know that the fault lies with badhost.sdsc.edu and not with the third resource (or with any other resource that subsequently fails, until badhost.sdsc.edu is fixed). Based on the results of the summary series, the All2AllFilter would mark this third resource as "NOT_AT_FAULT" for failing to ping badhost.sdsc.edu.
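The reasoning in this example can be sketched in Python. The rule below is a deliberately simple assumption (a failure is attributed to the target when every other source also fails to reach it) and is not the All2AllFilter's documented algorithm; the function name, the resource names r1 to r3, and the "AT_FAULT" label are invented for illustration.

```python
def classify_failure(source, target, latest_passed):
    """Label a failed ping from source to target.

    latest_passed maps (source, target) to True (pass) or False (fail) and
    stands in for the latest results gathered by the summary series.
    """
    others = [
        passed
        for (src, dst), passed in latest_passed.items()
        if dst == target and src != source
    ]
    # Assumed rule: if every other source also fails to reach the target,
    # the target is responsible rather than this source.
    if others and not any(others):
        return "NOT_AT_FAULT"
    return "AT_FAULT"  # label invented for this sketch


latest = {
    ("r1", "badhost.sdsc.edu"): False,
    ("r2", "badhost.sdsc.edu"): False,
    ("r3", "badhost.sdsc.edu"): False,
    ("r1", "inca.sdsc.edu"): True,
    ("r2", "inca.sdsc.edu"): True,
    ("r3", "inca.sdsc.edu"): False,
}
print(classify_failure("r3", "badhost.sdsc.edu", latest))
```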

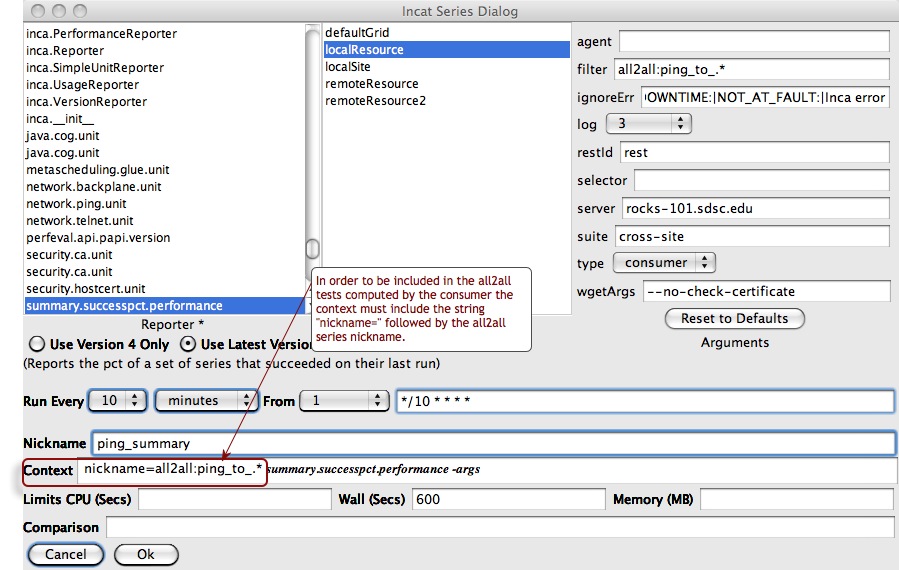

To configure a summary series for all2all tests, make a new series that will execute the "summary.successpct.performance" reporter with the following parameters:

"Context": contains a similar "nickname=all2all:testName_to_.*" string as the all2all tests being summarized. Note that ".*" replaces the "@pingHosts@" macro used above since we're only creating one series.

"filter": same as the Context string minus "nickname=". The reporter will get the latest results for all series whose nickname matches this string.

"ignoreErr": a regular expression that the summary reporter matches against error strings when counting failures. Errors that match are marked neutrally.

"restId", "server" and "type": tell the reporter where to get the latest test results (in this example via the consumer at rocks-101.sdsc.edu using the "rest" URL).

"suite": the suite the all2all tests being summarized can be found in.

"wgetArgs": extra arguments to use when wget'ing the test results.

The results of the all2all tests configured above are shown in the following image. Note that while the single summary series produces all the boxes in the "SUMMARY" column, each of the other boxes represents a unique series.
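To make the roles of the "filter" and "ignoreErr" parameters concrete, here is a minimal Python sketch of a summary pass over the latest results. It is not the summary.successpct.performance reporter itself: the function name, the input format, the example nicknames, and the choice to count ignored errors in a separate "neutral" bucket are all assumptions.

```python
import re


def summarize(results, filter_pattern, ignore_err=None):
    """Count pass/fail/neutral results whose nickname matches the filter.

    results is a list of (nickname, error_message) tuples, with None as the
    error message for a passing result.
    """
    nickname_re = re.compile(filter_pattern)
    ignore_re = re.compile(ignore_err) if ignore_err else None
    counts = {"pass": 0, "fail": 0, "neutral": 0}
    for nickname, error in results:
        if not nickname_re.fullmatch(nickname):
            continue  # series not covered by this summary
        if error is None:
            counts["pass"] += 1
        elif ignore_re and ignore_re.search(error):
            counts["neutral"] += 1  # error matches ignoreErr
        else:
            counts["fail"] += 1
    return counts


latest_results = [
    ("all2all:ping_to_cuzco.sdsc.edu", None),
    ("all2all:ping_to_badhost.sdsc.edu", "ping failed"),
    ("all2all:ping_to_inca.sdsc.edu", "request timed out"),
    ("gcc_version", None),
]
print(summarize(latest_results, r"all2all:ping_to_.*", ignore_err="timed out"))
```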

After we add the All2AllFilter described in Section 11.3.2 the results also show when an individual resource is not at fault for the error. Error messages are prefixed with "NOT_AT_FAULT" and marked neutrally in stylesheets ("noFault") and history graphs:

5.10. Series Comparison and Notification

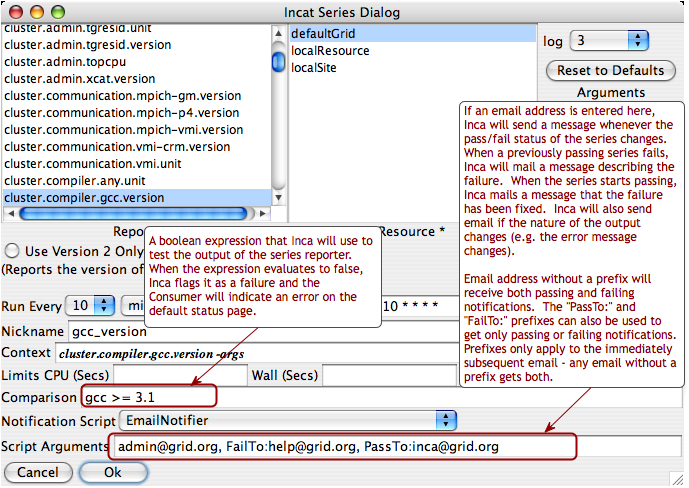

For a particular series, the Inca system by default reports only whether or not the series reporter was able to execute successfully--whether a version reporter was able to determine a package version, a unit reporter was able to run a program, etc. Using Inca's comparison and notification feature, you can refine a series to define success more precisely and to receive notification from Inca when a series reporter detects a problem. The bottom text boxes of the series edit dialog provide access to Inca's comparison and notification feature.

The comparison expression can test the content of the report body, the content of the report error message, or the value of any symbols defined in the report body by <ID> tags. The expression may use any of the boolean operators <, <=, >, >=, ==, and !=, plus perl's pattern match (=~) and mismatch (!~) operators. One simple test would be "body =~ /./", which would test whether the report body contained any characters. Tests can be joined together by the && and || operators. Using these, you could ignore an expected, minor error with the test "body =~ /./ || errorMessage == 'Try again later'".

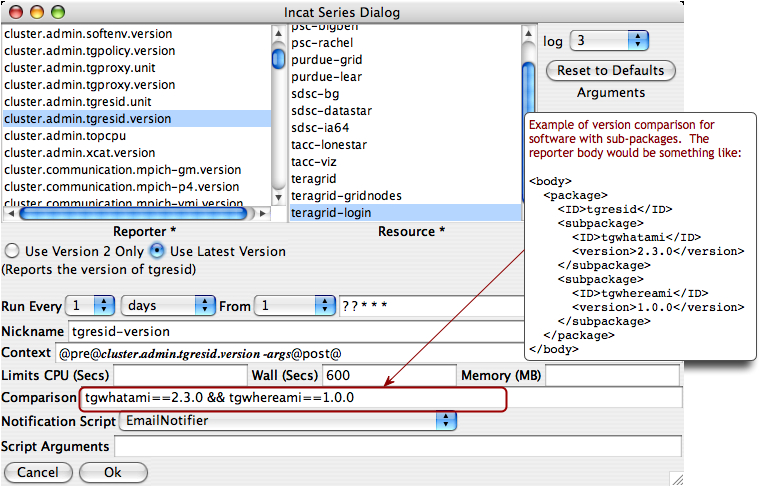

As mentioned above, you can include symbols defined in the report body in your tests. The Inca system uses the content of any subsequent tag as the symbol value. For example, the body of the output of the gcc version reporter might be

    <body>
      <package>
        <ID>gcc</ID>
        <version>3.1</version>
      </package>
    </body>

Here, Inca will use "3.1" as the value of the symbol "gcc". With this output, the comparison test "gcc >= 3.0" would succeed, while the comparison "gcc == 3.0" would fail. If the report body contains an <ID> tag with no subsequent tag, the value of the symbol is defined to be "".
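The symbol rule above can be tried out with a small Python sketch. It is an illustration only: the function name is hypothetical, "subsequent tag" is interpreted as the next sibling element, and the numeric comparison via `float` is an assumption about how a version such as "3.1" is compared.

```python
import xml.etree.ElementTree as ET

REPORT_BODY = "<body><package><ID>gcc</ID><version>3.1</version></package></body>"


def extract_symbols(body_xml):
    """Map each <ID> value to the text of the tag that follows it ("" if none)."""
    symbols = {}
    root = ET.fromstring(body_xml)
    for parent in root.iter():
        children = list(parent)
        for index, child in enumerate(children):
            if child.tag != "ID":
                continue
            has_next = index + 1 < len(children)
            value = (children[index + 1].text or "") if has_next else ""
            symbols[(child.text or "").strip()] = value.strip()
    return symbols


symbols = extract_symbols(REPORT_BODY)
gcc = float(symbols["gcc"])  # numeric comparison is an assumption of this sketch
print(gcc >= 3.0, gcc == 3.0)  # prints "True False"
```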

The image below illustrates how a comparison for subpackage versions would be configured.

Inca supports the ability to run a script that notifies you of changes in a series comparison. When you specify a series comparison in the incat series dialog, two additional components become visible. The Notification Script pull-down menu allows you to select the script to run, and the Script Parameters text box allows you to enter parameters to pass to the script. By default, the Inca installation provides two notification scripts, EmailNotifier and LogNotifier. The first sends email to each address specified by the script parameters; the second writes a message to the specified log file. See Section 11.2 for directions on customizing the notifications you receive.

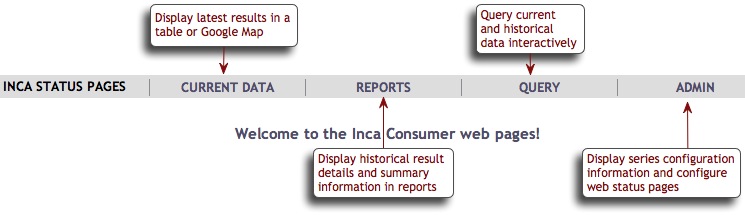

6. Data Display (Data Consumers)

The data that the Inca depot stores can be displayed in many ways, such as current status reports, historical graphs, and customized status information. An Inca data consumer is anything that retrieves data from the depot and displays it (e.g. a JSP, SQL query, CGI, etc.). The Inca depot provides access to stored data via Perl and Java client APIs (see Section 7.2.3).

The data consumer packaged with Inca is a collection of JavaServer Pages (JSP) and associated files. The consumer is installed in $INCA_DIST/webapps/inca and is deployed with Jetty when the consumer is started (e.g., ./bin/inca start consumer). The consumer listens on ports 8080 (HTTP) and 8443 (HTTPS) unless $INCA_DIST/etc/jetty.xml is edited as described in Section 6.12.

The default consumer's JSPs query the depot for XML results and either apply XSL to them in order to display HTML status pages or parse out data and display it in graphs. The figure below shows the default menu header. Each menu item invokes a JSP as described in the sections below.

6.1. Current Data

The default page header navigation contains links to tabular and map view result summaries for the sample suite. To add other current data pages under this heading (e.g., result summary tables for additional suites), customize the header.xsl file as described in Section 6.9.3.

6.1.1. Tabular Suite Summary View

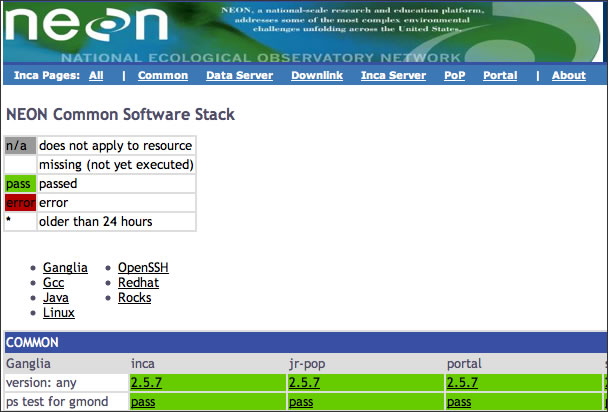

The first item in the CURRENT DATA menu executes status.jsp with default.xsl to create a summary table of suite results. This JSP takes comma-delimited lists in its suiteNames and optional resourceIds parameters that specify the suites and resources to be shown. The default installation invokes http://localhost:8080/inca/jsp/status.jsp?suiteNames=sampleSuite&resourceIds=defaultGrid, producing an image similar to this one:

6.1.2. Map View using Google Map API

6.1.2.1. Overview

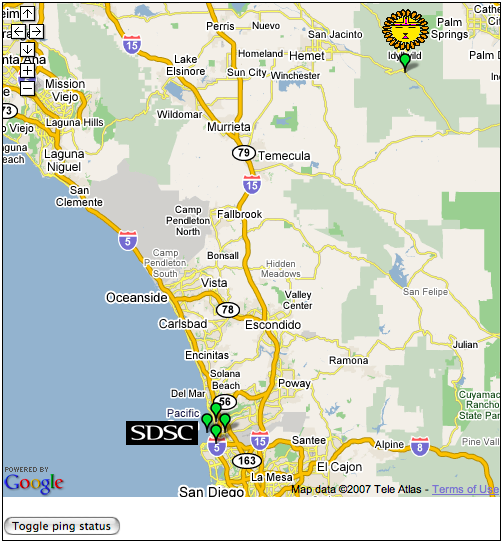

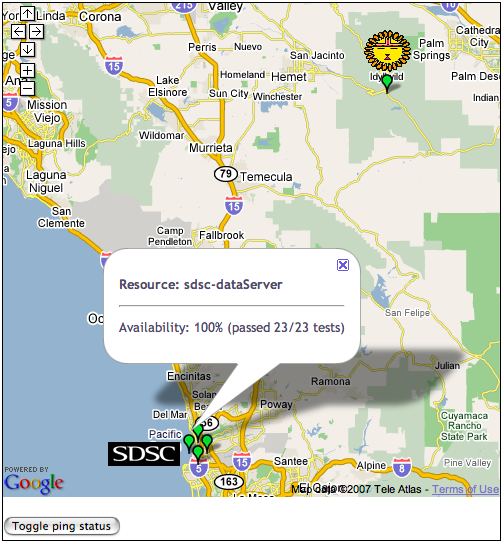

The second item in the CURRENT DATA menu executes status.jsp using google.xsl to display a map that provides a summary of the current status of resources. For each resource, the map view gives the percentage of reports passed, number of passed reports, number of failed reports, and a list of the failed tests with a link to each report details page. A resource is represented on the map as a marker and colored red, green, or orange based on the number of tests that have passed and/or failed. The figure below shows the Inca Google map view for the NEON testbed (four resources at SDSC and one resource at James Reserve). All resources are passing their tests, so every resource marker is green.

Clicking on a marker displays a pop-up with the name of the resource and its status information as shown below.

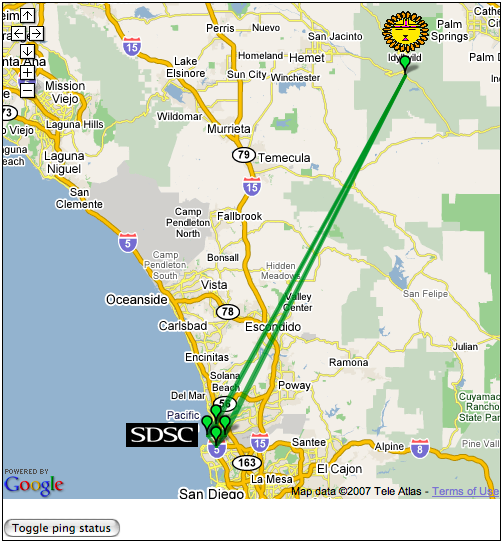

Clicking on the "Toggle ping status" button displays the status of the cross-site ping test as shown below.

6.1.2.2. Setting up Google Map View

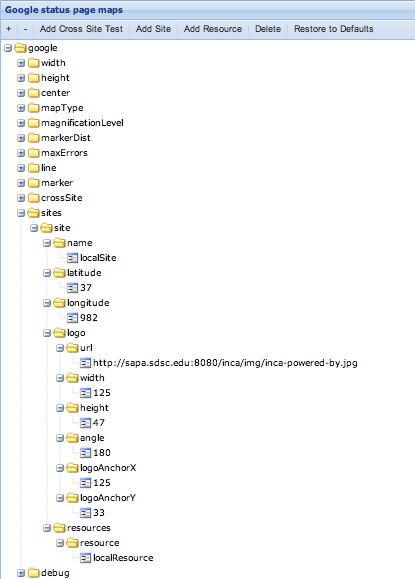

The Inca map views can be configured using the page described in Section 6.4.2. Use the options in Section 6.1.2.2.1 to configure maps for your site.

Next, give the map generator the locations of your resources. Use a configuration page like the one below to add or edit sites and resources:

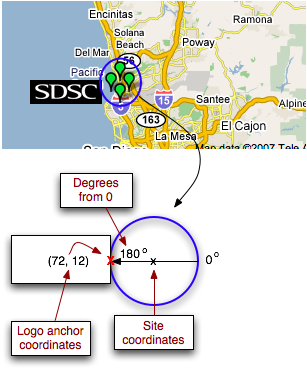

Provide a site name, latitude/longitude coordinates and a list of resources for each site. Optionally, you can also specify a logo for the site. Specify the height and width of the logo in pixels, angle from 0 degrees, and logo anchor coordinates (logoAnchorX/logoAnchorY) as shown in the figure below:

6.1.2.2.1. Google Map Options

width/height: The size of the map graphic (in pixels) that will be generated. By default the map is 800x500.

center: The center of the map in latitude/longitude coordinates. By default, the center of the map corresponds to the center of the U.S.

mapType: Type of map to display. By default the map is google.maps.MapTypeId.TERRAIN.

google.maps.MapTypeId.ROADMAP: displays the default road map view.

google.maps.MapTypeId.SATELLITE: displays Google Earth satellite images.

google.maps.MapTypeId.HYBRID: displays a mixture of normal and satellite views.

google.maps.MapTypeId.TERRAIN: displays a physical map based on terrain information.

magnificationLevel: The initial magnification, or zoom level, of the map expressed as a number between 1 and 12. Zoom level 1 displays the entire world, while zoom level 12 allows you to read street names.

markerDist: The distance between resource markers at a site. If there is more than one resource at a particular site, the resource markers will be arranged in a circle around the site center. By default, the distance between the markers will be determined so that the markers do not overlap each other.

maxErrors: The maximum number of errors to display in the info window that is displayed when a resource is clicked.

line: For cross-site tests, a line will be displayed between the two sites to indicate the test status. If a suite contains a cross-site test, a button will be displayed below the map named "Toggle <testName> status". When the button is clicked, the status of an individual cross-site test will be expressed as a line in between the source resource and the destination resource. The color of the line used to represent the test status can be customized. By default, green represents the test passed and red represents the test failed. See "crossSite" below to specify the tests you want displayed.

marker: Customize the look of the icon marker used to represent a resource on the map. A resource is represented by a Google marker whose color varies with the number of tests that it failed; there is one marker each for all tests passed, at least one test failed, and all tests failed. Suppose you wanted to replace these markers with weather icons, one for each of the same three states. You would

first find the size of the icons and modify the iconWidth and iconHeight (in this case the weather icons are 32x32 pixels).

Then pick the anchor point for the icon to be placed in relation to the resource's place on the map. Since we want the middle of the icon to be placed on the map, we choose the coordinates (16, 16) and modify iconAnchorCoord.

Next determine the anchor point for the info window to pop up relative to the icon. Since we want the info window to appear in the top middle, we choose the coordinates (16, 10) and modify iconInfoWindowAnchorCoord.

Then construct the icon URLs using the three weather icons located on the Google server at: http://maps.google.com/mapfiles/kml/pal4/icon33.png, http://maps.google.com/mapfiles/kml/pal4/icon34.png, http://maps.google.com/mapfiles/kml/pal4/icon36.png. Set the following values: iconUrlPrefix="http://maps.google.com/mapfiles/kml/pal4/icon", iconStatus->fail="36", iconStatus->pass="33", iconStatus->warn="34", iconUrlSuffix=".png".

Finally, specify the url for the shadow icon by setting shadowIconUrl="http://maps.google.com/mapfiles/kml/pal4/icon36s.png".

iconWidth/iconHeight: size of icon in pixels.

iconAnchorCoord: the coordinates of point inside the icon to be used for the anchor.

iconInfoWindowAnchorCoord: where the anchor point for the info window should appear inside the icon.

iconUrlPrefix/iconStatus/iconUrlSuffix: set of 3 icons to indicate the different resource status: pass/fail/warn based on the number of tests the resource passes. The url for the 3 different images should have the same url pattern constructed by concatenating <iconUrlPrefix>, <iconStatus>/<fail|pass|warn>, and <iconUrlSuffix>.

shadowIconUrl: url of an icon to use for the shadow of the resource icon.

shadowIconWidth/shadowIconHeight: the size of the shadow icon in pixels.

crossSite: For each cross site test specified, a button will be displayed under the map to toggle status lines on and off the map. Each test has a name and a regex (regular expression to match the cross site test nicknames).

sites: The information about where to place resources in a site. Can have multiple logos and resources for a site. The logo angle/logoAnchorX/logoAnchorY are placement relative to the latitude/longitude. Angle is the degrees from the site center (latitude/longitude). For example, an angle of 0 will place the logo to the right of the resources, 180 to the left, 90 to the top, and 270 to the bottom. logoAnchorX and logoAnchorY are used to indicate the coordinates relative to the image that should be placed on the map. For example, if your image is 12x12, using logoAnchorX=6 and logoAnchorY=6 will place the image in the center.

debug: For development purposes. Will print out some log messages in a javascript window if greater than 0.
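The way the three icon URLs are assembled from iconUrlPrefix, iconStatus, and iconUrlSuffix can be shown in a few lines of Python. The dictionary layout and function name are assumptions made for this sketch; the values are the weather icon settings from the marker example above.

```python
# Values from the weather icon example; the dictionary layout is hypothetical.
ICON_CONFIG = {
    "iconUrlPrefix": "http://maps.google.com/mapfiles/kml/pal4/icon",
    "iconStatus": {"pass": "33", "warn": "34", "fail": "36"},
    "iconUrlSuffix": ".png",
}


def icon_url(status, config=ICON_CONFIG):
    """Concatenate prefix, per-status value, and suffix into an icon URL."""
    return (
        config["iconUrlPrefix"]
        + config["iconStatus"][status]
        + config["iconUrlSuffix"]
    )


for status in ("pass", "warn", "fail"):
    print(status, icon_url(status))
```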

6.1.2.3. Accessing the Google Map View

Once setup is complete, load the map view by selecting the "Map of sampleSuite results" item of the CURRENT DATA menu in the navigation bar.

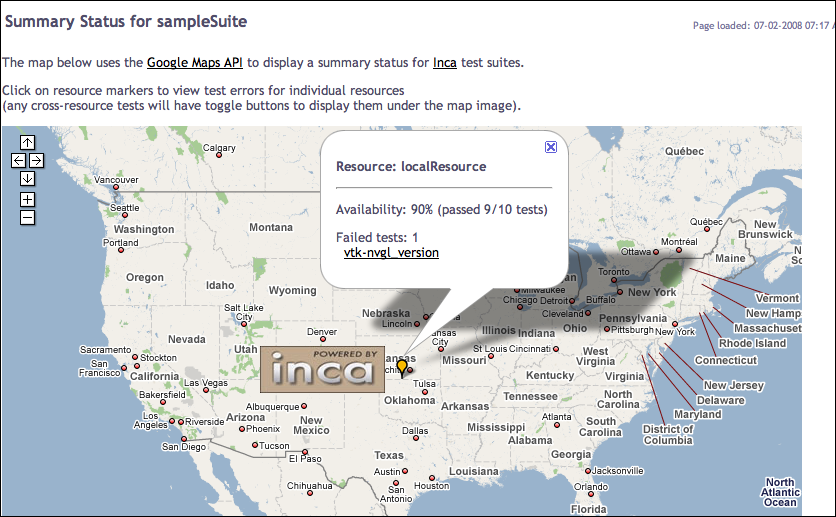

In the default installation, this links to http://localhost:8080/inca/jsp/status.jsp?xsl=google.xsl&xml=google.xml&suiteNames=sampleSuite&resourceIds=defaultGrid. This shows one resource and the Inca logo, similar to the map below. The resource status for multiple suites can be shown by editing header.xsl to provide comma-delimited lists for status.jsp's suiteNames and resourceIds parameters.
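As an illustration of how such a link is put together, the following Python sketch builds a status.jsp URL from lists of suites and resources. The helper name is hypothetical, the base URL is the default installation's address, and whether the consumer also accepts percent-encoded commas has not been checked, so the sketch leaves commas unencoded.

```python
from urllib.parse import urlencode

BASE_URL = "http://localhost:8080/inca/jsp/status.jsp"


def map_view_url(suite_names, resource_ids, base_url=BASE_URL):
    """Build a map view URL with comma-delimited suite and resource lists."""
    params = {
        "xsl": "google.xsl",
        "xml": "google.xml",
        "suiteNames": ",".join(suite_names),
        "resourceIds": ",".join(resource_ids),
    }
    # safe="," keeps the list separators as literal commas instead of %2C.
    return f"{base_url}?{urlencode(params, safe=',')}"


print(map_view_url(["sampleSuite"], ["defaultGrid"]))
```

With the default arguments shown, the result is the same link used by the default installation.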

Clicking on the resource marker will display an info box as below. Clicking on the name of any failed test will take you to the reporter details page.

6.2. Reports

The items in the REPORTS menu display graphic reports of current Inca data and series histories. These graphs are generated using Cewolf, a JSP tag library for graphing based on JFreeChart. Some reports require the "incaQueryStatus" query to have run previously; information about queries can be found in Section 6.3.3.

6.2.1. Pass/Fail Graphs and Error Messages

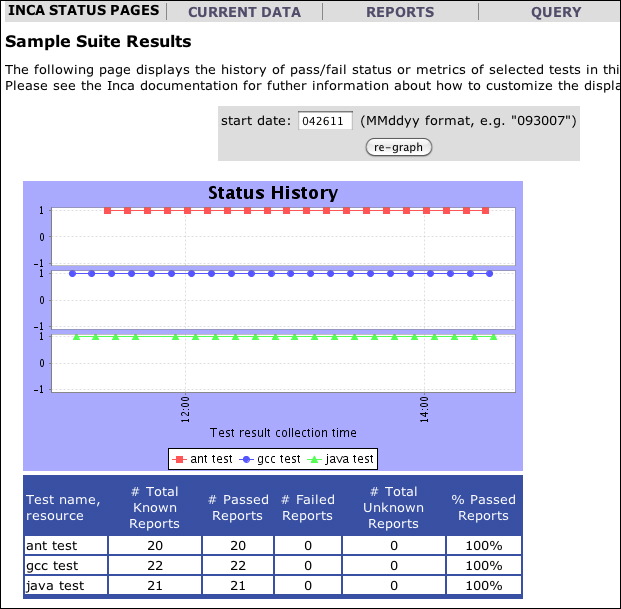

The first item in the REPORTS menu executes report.jsp, which uses Cewolf/JFreeChart to generate historical summary reports with pass/fail status and error information.

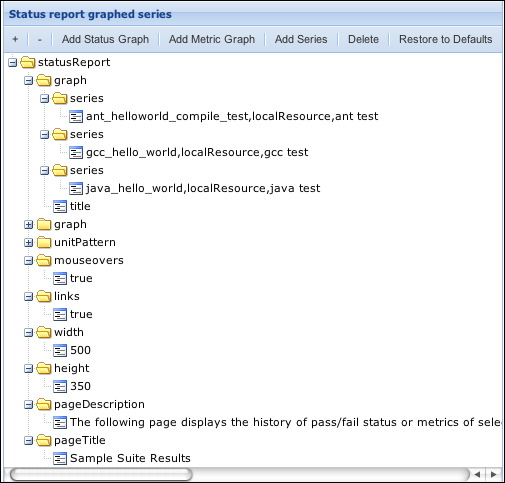

The graphed series can be customized using the page described in Section 6.4.2. Each "graph" folder represents a separate graph on the page and the series under it will appear in the graph. Series should be entered in the format "nickname,resource,label" and correspond to the names and resources of committed series. Each graph has its own title. The height and width of all the graphs can also be customized as well as the page description and title.

The default consumer groups different combinations of sampleSuite series and displays the past week of results. Each set of series has a title (e.g., Software Deployment Tests) for its grouping and the series nickname, resource name, and report label for each series in the group.

6.2.2. Metric Reports

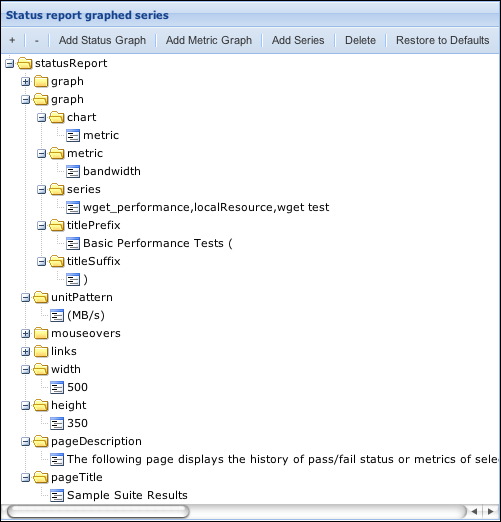

As above, the first item in the REPORTS menu executes report.jsp, which uses Cewolf/JFreeChart and can also generate historical summary reports that graph one or more metrics.

The graphed series can be customized using the page described in Section 6.4.2. Each "graph" folder represents a separate graph on the page and the series under it will appear in the graph. Series should be entered in the format "nickname,resource,label" and correspond to the names and resources of committed series. The default consumer graphs a single metric called 'bandwidth' for the series "wget_performance,localResource,wget test". Additional metrics can be specified as a comma separated list, e.g., "EP-STREAM_Triad_GB_s,G-STREAM_Triad_GB_s,Wall_Mins".

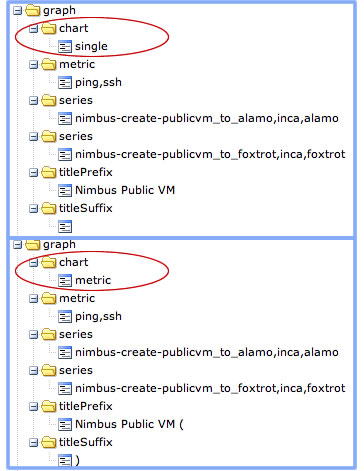

There are three different chart types that can be specified as defined below. By default, the chart type will be 'metric'.

metric: one graph containing all series will be printed per metric

series: one graph containing all metrics will be printed per series

single: all series and metrics will display on a single graph
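The difference between the three chart types is easiest to see by listing which lines end up on which graph. The Python sketch below does this for two series and two metrics; the function name and the representation of a graph as a list of (series, metric) pairs are assumptions for illustration only.

```python
def plan_graphs(series, metrics, chart_type="metric"):
    """Return a list of graphs; each graph is a list of (series, metric) lines."""
    if chart_type == "metric":
        # One graph per metric, containing all series.
        return [[(s, m) for s in series] for m in metrics]
    if chart_type == "series":
        # One graph per series, containing all metrics.
        return [[(s, m) for m in metrics] for s in series]
    if chart_type == "single":
        # Everything on one graph.
        return [[(s, m) for s in series for m in metrics]]
    raise ValueError(f"unknown chart type: {chart_type}")


for chart_type in ("metric", "series", "single"):
    graphs = plan_graphs(["alamo", "foxtrot"], ["ping", "ssh"], chart_type)
    print(chart_type, [len(lines) for lines in graphs])
```

For two series and two metrics, "metric" and "series" each yield two graphs with two lines, while "single" yields one graph with four lines.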

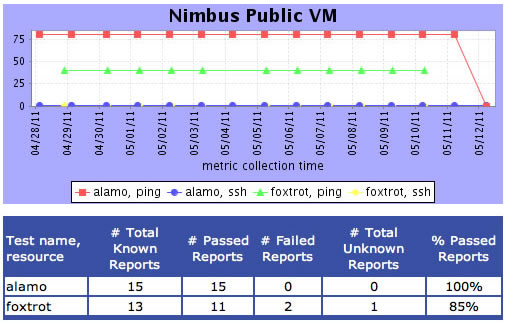

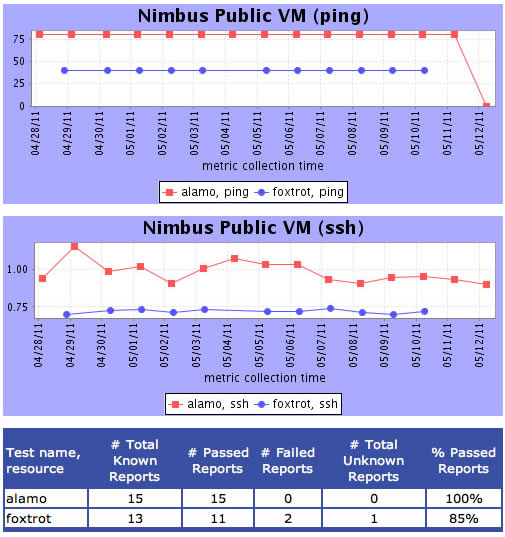

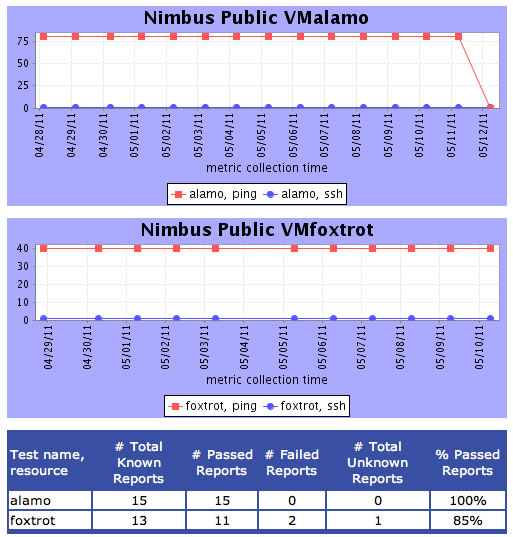

Below is the graph configuration for two series, one connecting to the alamo resource and the other to the foxtrot resource. Each series collects two metrics - ping and ssh. There are two graphs that are identically configured except that the first one uses the "single" chart type so that all series and metrics are graphed together, and the second uses the "metric" chart type so that the ssh and ping metrics are graphed separately.

The graphs created by the configuration above are shown below. The first graph uses the chart type "single" so we see four lines, one for each series and one for each metric:

The second configuration uses the chart type "metric" so there are two graphs, one for each metric. Each graph has two lines, one for each series:

If the configuration used the chart type "series" there would be two graphs, one for each series. Each graph would have two lines, one for each metric:

6.2.3. Average Pass Rate by Resource/Suite

The second item in the REPORTS menu executes summary.jsp, which uses Cewolf/JFreeChart and the stylesheets seriesAverages.xsl and periodAverages.xsl to show the average series pass rate by resource and suite for the past week. Each bar label shows the value of the average series pass rate for the last week and the difference in percentage from the previous week. The color is green if the average pass rate is better than the previous week, red if the average pass rate is worse, and gray if there was no change. Individual bars can be clicked on to show the percentages broken down further for each individual resource or suite.

Click on any bar to view a more detailed report for that resource or suite. For example, clicking on the bar for the 'app-support.teragrid.org-4.0.0' suite above shows the average pass rates for the 'app-support.teragrid.org-4.0.0' suite for each resource (figure below).

6.2.4. Series Error Summary

The third item in the REPORTS menu executes status.jsp with seriesSummary.xsl to summarize test series errors by time period. Each time period includes the number of errors for the series during the time period, the number of unique or distinct errors during the period, and the percentage of the total results that passed during the period.

The time periods and titles (i.e., PAST 4 WEEKS) can be customized using the page described in Section 6.4.2.

The change between the total number of errors in the most recent period and the total number of errors in the period before it is also given. If the number of errors in the most recent period is greater than the number of errors in the previous period (+), the number appears in red. If the number of errors in the most recent period is less than the number in the previous period (-), the number is green.
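The sign and color rule for the change in errors can be written as a short Python sketch. The function name and return format are hypothetical, and the handling of an unchanged error count (no highlight color) is an assumption, since only the increase and decrease cases are described above.

```python
def error_change(previous_errors, recent_errors):
    """Return the signed change in errors and the color used to display it."""
    delta = recent_errors - previous_errors
    if delta > 0:
        return f"+{delta}", "red"  # more errors than the previous period
    if delta < 0:
        return str(delta), "green"  # fewer errors than the previous period
    return "0", None  # assumption: no highlight when nothing changed


print(error_change(3, 5))
print(error_change(5, 3))
```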

6.2.5. Average Pass History

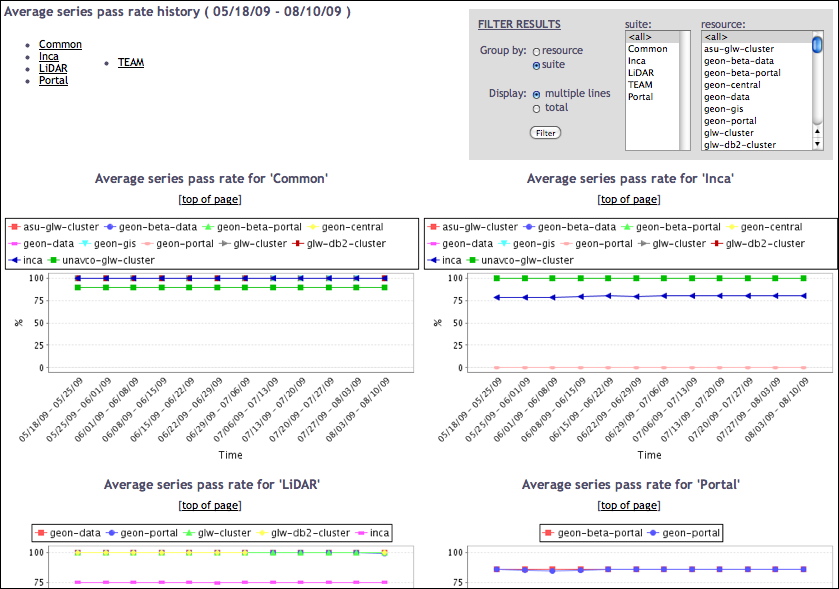

The fourth and fifth items in the REPORTS menu execute summaryHistory.jsp, which uses Cewolf/JFreeChart and the stylesheets seriesAverages.xsl and periodAverages.xsl to show the average series pass rate over time grouped by resource and suite. For example, when grouped by suite, a history graph summarizing the average series pass rate is displayed for each suite. By default, multiple lines are used to show the summary history on each resource. To view the total summary history percentages for all resources, check the total checkbox in the top right corner and click the 'Filter' button. Histories for specific resources or suites can also be displayed through the form in the top right corner. The amount of history shown is 4 weeks by default but can be modified by editing the 'incaQueryStatus' query parameters described in Section 6.3.3.

6.3. Query

The query pages allow users to generate graphs and cache queries to improve data display.

6.3.1. Create Graph

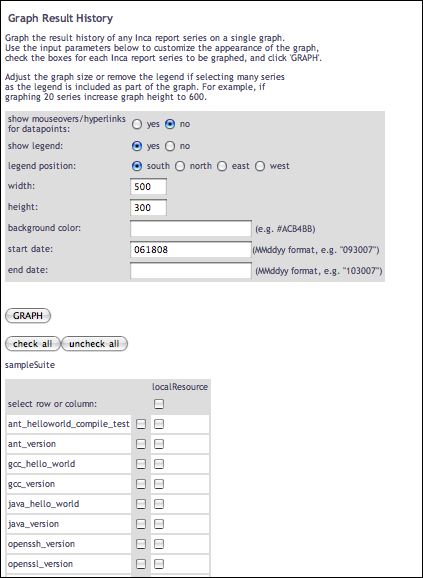

The first item of the QUERY menu executes status.jsp with graph.xsl to create a form that allows you to graph the history of more than one series. The form allows you to select the set of series of interest via a set of checkboxes.

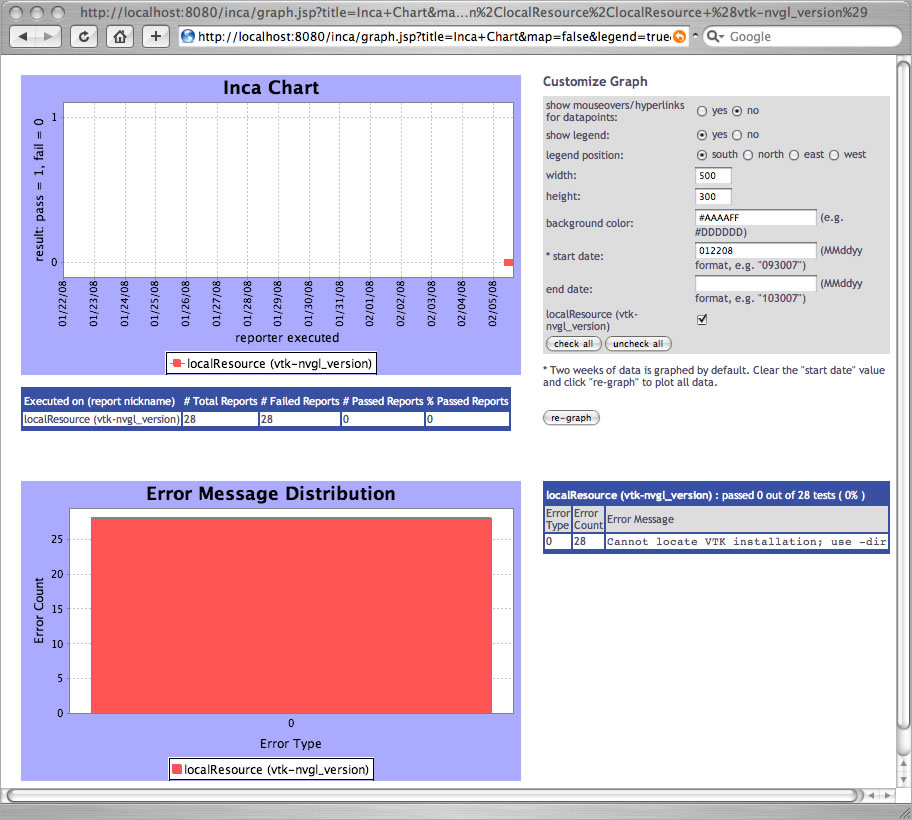

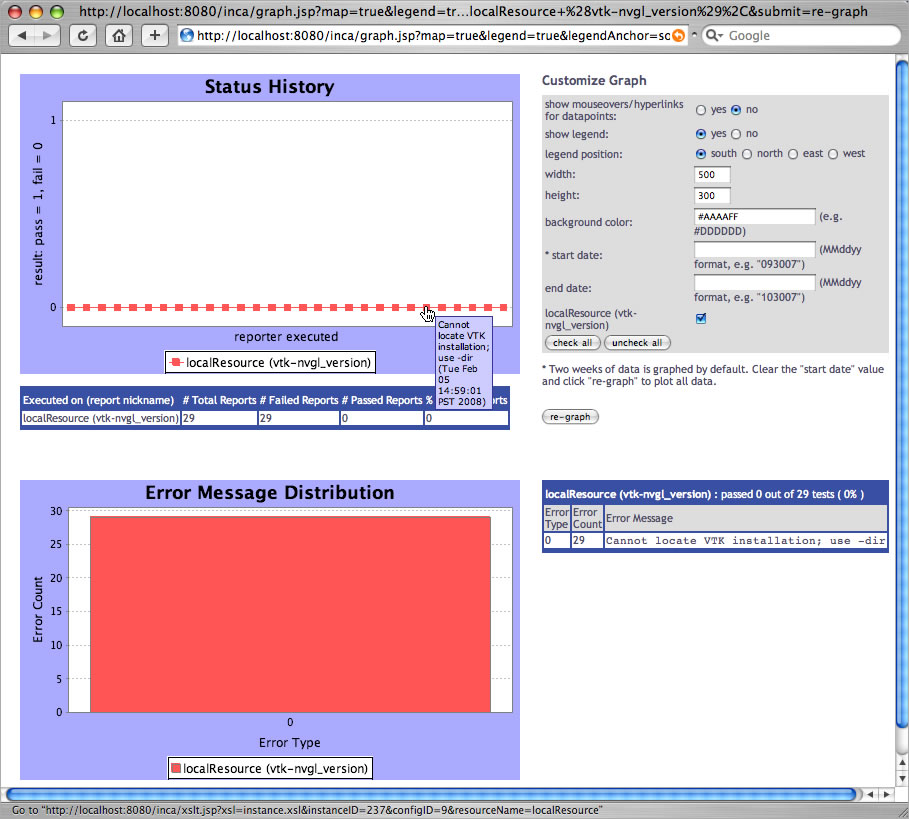

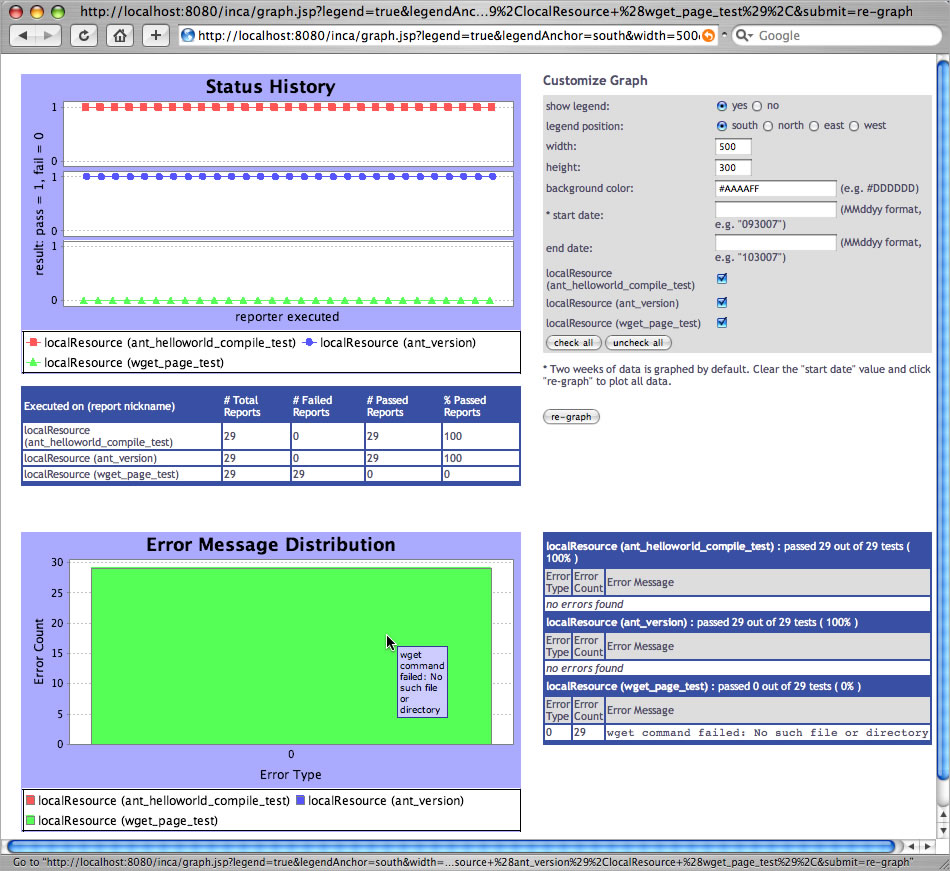

After selecting the "vtk-nvgl_version" series and clicking on the "graph" button in the form above, a graph page similar to the one below is shown with an XY plot of the pass/fail status of the selected series, a series pass/fail summary table, a bar graph of error message frequency (if the selected series have errors), a summary table of error messages, and a form to customize the graph further.

To query for all results, clear the "start date" field in the "customize graph" section and click "re-graph". To retrieve graphs more quickly, data point mouseover text and links to report details are turned off for the pass/fail graph. The data points can be made interactive by choosing "show mouseovers/hyperlinks" in the "customize graph" section and clicking on "re-graph". Clicking on interactive data points leads to report details pages; mousing over them shows collection times and any error messages.

Multiple series can also be graphed together as in the image below. A table under each graph summarizes results for each series.

Graph pages for individual series are also linked from the report details pages.

6.3.2. Create Stored Query

The second item in the QUERY menu executes status.jsp with create-query.xsl to provide a form for creating a cached query. Unless password protection has been disabled as described in Section 6.15, selecting this item causes the consumer to prompt for an id and password. The consumer can cache frequently executed depot queries in order to improve data display speed. For example, the latest results for a suite can be cached in order to quickly display a suite status page. By default the latest results for the sampleSuite are cached every 2 minutes. Results are also cached each day for historical reports.

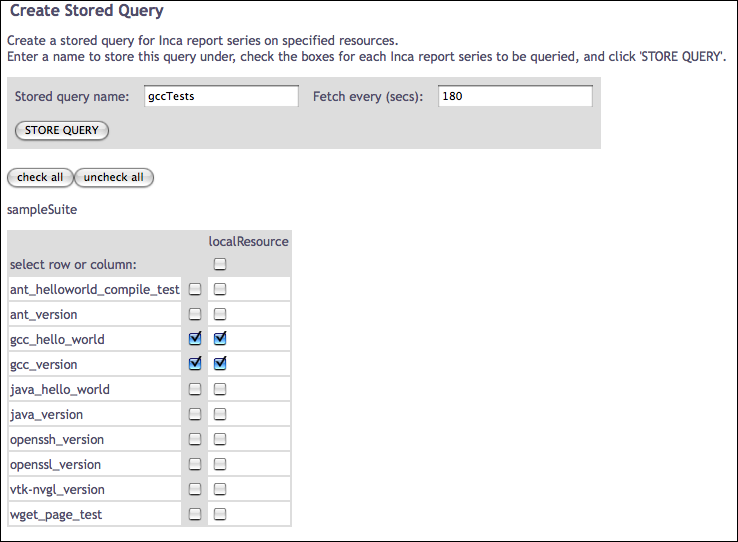

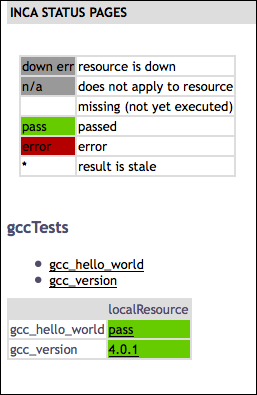

Cached queries can be used to display custom tables of latest results, using the queryNames parameter to status.jsp. For example, the image below shows the creation of a cached query, named gccTests, for two series in the default installation.

Once this query has been stored and executed, the table shown below can be viewed via http://localhost:8080/inca/jsp/status.jsp?queryNames=gccTests&resourceIds=defaultGrid

6.3.3. Manage Stored Queries

The third item in the QUERY menu executes query.jsp to allow management of cached queries. Unless password protection has been disabled as described in Section 6.15, selecting this item causes the consumer to prompt for an id and password. Click on one of the pre-defined queries to see its parameters. Each query has a name, execution schedule, depot command it executes and the parameters for the depot command executed.

By default, the latest instances of all suites are cached every 2 minutes by predefined queries named incaQueryLatest+agentUri_suiteName. These cached queries are used for the CURRENT DATA menu views described in Section 6.1. Another default query is "incaQueryStatus", which retrieves a summary of the number of successes and failures for each series for the past 4 weeks. This cached query is used for several of the reports described in Section 6.2. To display 10 weeks of history instead, change "28" days to "70" and click "Change". Note that the consumer will automatically re-create any of these default queries that are deleted.

6.4. Admin

The ADMIN menu item offers two views of the running reporters in an Inca deployment and a configuration page. To link other informational pages under this heading, customize the header.xsl file as described in Section 6.9.3.

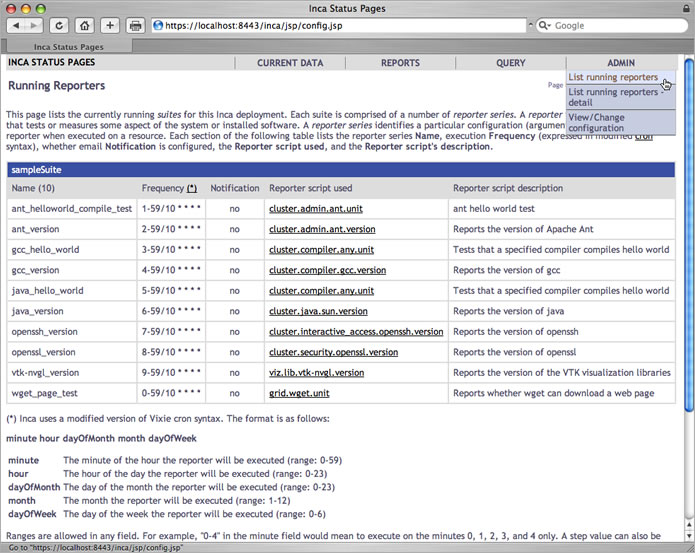

6.4.1. List Running Reporters

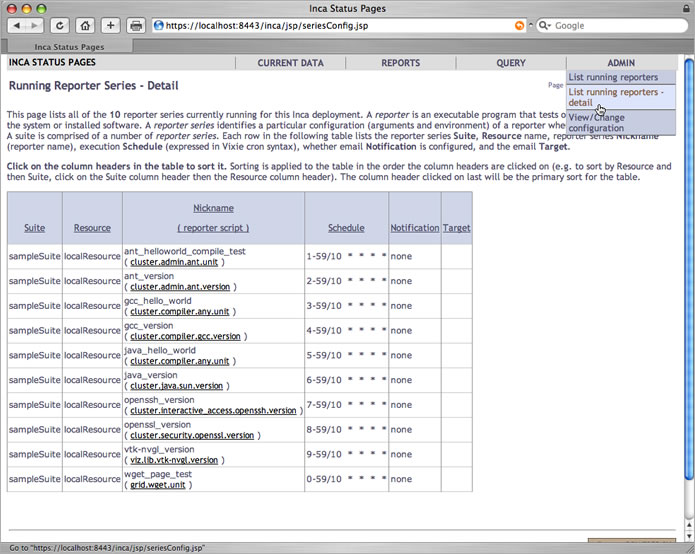

The "Running Reporters" page displays running reporter series by suite. Each series lists its name, frequency of execution, whether email notification is enabled, the reporter script used for the series, and a description of the reporter script. Note that in this view, and in the more detailed running reporters view, the reporter names link to a CGI script that will not work for repositories that are not web accessible (like the local default repository). Make your repository web accessible and add/commit the repository URL beginning with "http" in order to activate the reporter links.

The detailed "Running Reporter Series" page below lists the running reporter series and the suite each belongs to, the machine each is scheduled to execute on, whether email notification is configured, and the recipient ("Target") of any notification.

6.4.2. View/Change Configuration

Here Inca administrators can view and change the global configuration of status pages. They can choose whether to allow run nows, that is, the execution of tests via the detailed status pages (see Section 6.5.1), and configure the knowledge base, Google map, series summary, and status report pages.

6.5. Report Details

The Current Data, Reports, and Query views described in previous sections provide links to a view that displays the individual report details as shown in the figure below.

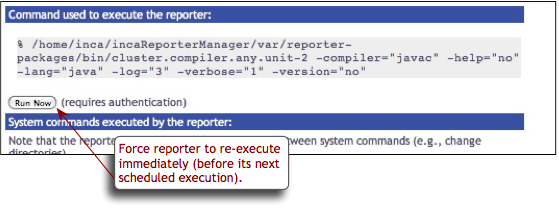

6.5.1. Run Nows

If the "Allow run nows from web pages" option is checked (see Section 6.4.2), a "Run Now" button will appear under the "Command used to execute the reporter" heading. This option is useful for system administrators who have fixed an issue and want to see the updated result or for those who want to verify that a problem has been resolved.

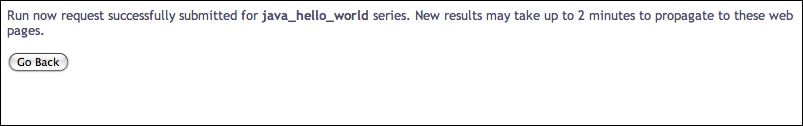

Clicking on the "Run Now" button triggers reporter execution on the local machine. Depending on the reporter execution time and page cache frequency (see Section 6.3.3), results may take a few minutes to propagate to the Inca web pages.

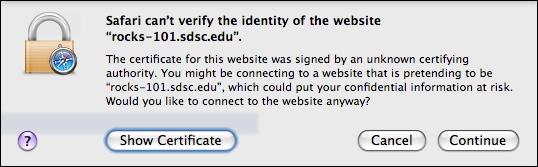

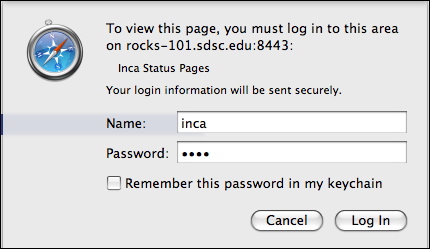

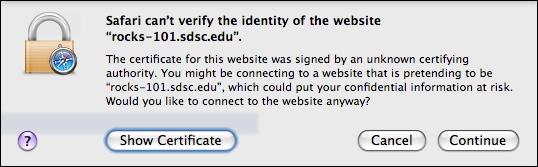

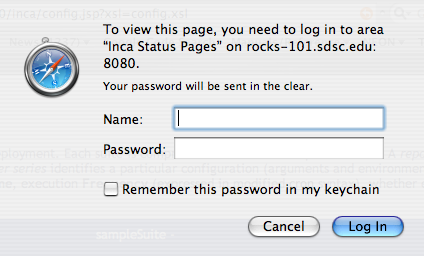

Since authentication is required based on the authentication setup (see Section 6.15), a box like the following will pop up:

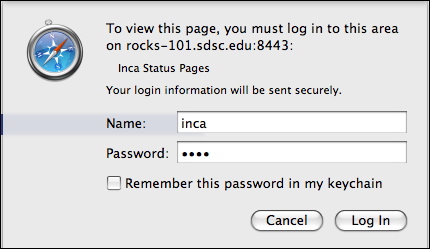

Press 'Continue' and the security login window will display such as follows:

Use the username and password specified in $INCA_DIST/etc/realm.properties. By default, the username and password are 'inca'. After the consumer submits the request, you will see a confirmation such as below:

6.5.2. Knowledge Base

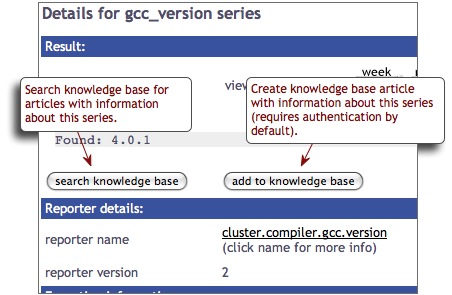

The Inca default knowledge base is designed to collect problem resolution information for tests. System administrators may use solutions stored in the knowledge base when debugging issues on their resources. The knowledge base can be configured using the admin page described in Section 6.4.2 with the options described after the configuration image below:

enable:

If the "enable" value is set to "true" then a "search knowledge base" button and an "add to knowledge base" button will appear under the "Result" heading of the reporter details status pages. If "enable" is set to "false" then the knowledge base buttons will be hidden and inaccessible.

searchString:

The URL that will be visited after clicking on the "search knowledge base" button. The macros "@nickname@", "@reporter@" and "@error@" will be replaced by the actual series nickname (e.g. gcc_version), reporter name (e.g. cluster.compiler.gcc.version), and error message for each test. The "+" character will be replaced by "&".

submitString:

The URL that will be visited after clicking on the "add to knowledge base" button. The macros "@nickname@", "@reporter@" and "@error@" will be replaced by the actual series nickname (e.g. gcc_version), reporter name (e.g. cluster.compiler.gcc.version), and error message for each test. The "+" character will be replaced by "&".

submitEmailNotification:

The email address to send new, changed, and removed knowledge base articles to. By default no email notifications are sent and the "submitEmailNotifications" value is set to "none". To add email notifications, change this value to an email address or a list of email addresses separated by commas (e.g. "email1@loc.edu, email2@loc.edu").

Once the knowledge base is enabled, two buttons to search and add to the knowledge base will appear on the report details pages:

Clicking on the "search knowledge base" button will lead to the URL set as the "searchString" value. By default, this returns all of the knowledge base articles related to the series:

Clicking on the "add to knowledge base" button will lead to the URL set as the "submitString" value. By default, this is a form for entering article information. Since authentication is required for this form based on the authentication setup (see Section 6.15), a box like the following will pop up:

Press 'Continue' and the security login window will display such as follows:

Use the username and password specified in $INCA_DIST/etc/realm.properties. By default, the username and password are 'inca'. After the consumer submits the request, a form will appear to complete like the one below:

6.5.2.1. Using an External or Custom Knowledge Base

To use an external or custom knowledge base instead of Inca's default internal knowledge base, configure the "searchString" and "submitString" parameters described in Section 6.5.2. For example, to add articles to an external knowledge base with a cgi submission form like

https://www.teragrid.org/cgi-bin/add-kb.cgi?articleText=text

change the "submitString" parameter to something like

https://www.teragrid.org/cgi-bin/add-kb.cgi?articleText=@nickname@%20@error@

Inca will replace the @nickname@ macro with the actual series nickname (e.g. gcc_version) and the @error@ macro with the series error message. A space is written as "%20" rather than "+" because Inca replaces the "+" character with "&". Use "+" wherever the target URL needs "&" to separate parameters. For example, if the external knowledge base had a cgi submission form like

https://www.teragrid.org/cgi-bin/add-kb.cgi?articleTitle=title&articleText=text

then the "submitString" parameter would be changed to something like

https://www.teragrid.org/cgi-bin/add-kb.cgi?articleTitle=@nickname@+articleText=@error@

The same syntax applies to the "searchString" parameter, which Inca uses to search for knowledge base articles and which can also be an external or custom URL.
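The macro and "+" substitution described above can be illustrated with a short Python sketch. This is not Inca's own code: the function name `expand_kb_url` is hypothetical, and percent-encoding the substituted values is an assumption of this sketch (the guide does not say whether Inca encodes them).

```python
from urllib.parse import quote


def expand_kb_url(template: str, nickname: str, reporter: str, error: str) -> str:
    """Expand a searchString/submitString template into a URL (illustrative only)."""
    # In the configured string, "+" stands for "&".
    url = template.replace("+", "&")
    # Assumption: values are percent-encoded before substitution.
    values = {"@nickname@": nickname, "@reporter@": reporter, "@error@": error}
    for macro, value in values.items():
        url = url.replace(macro, quote(value, safe=""))
    return url


if __name__ == "__main__":
    template = (
        "https://www.teragrid.org/cgi-bin/add-kb.cgi"
        "?articleTitle=@nickname@+articleText=@error@"
    )
    print(expand_kb_url(template, "gcc_version",
                        "cluster.compiler.gcc.version", "gcc not found"))
```

Replacing "+" before substituting the macros keeps any "+" inside an error message from being turned into a parameter separator.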

6.6. Report Summaries

The Current Data and Query views described in previous sections display "latest" report summaries like the one shown in the figure below.

Report summaries are generated by a depot query that returns the XML described in Section 7.1.1.

6.7. Description of Data Consumer Files

The following table describes the main JSPs contained in the default consumer. The JSPs generally retrieve XML from depot and agent functions and apply XSL stylesheets to display HTML. If the "debug=1" parameter for a JSP is used, the JSP displays the XML. Parameters marked with an asterisk (*) are optional.

Table 2. Default Consumer JSP

| Name | Purpose | Parameters |
|---|---|---|
| config.jsp | Prints description of deployed suites and series. Linked at the bottom of index.jsp | |
| error.jsp | Prints Inca error message page. | |
| graph.jsp | Historical graphs of pass/fail status and error frequency. | e.g. http://localhost:8080/inca/jsp/graph.jsp?series=ant_helloworld_compile_test,localResource |
| index.jsp | Lists an installation's configured suites and resource names in an HTML form whose action is to display results for the selected suite and resource. The consumer initially redirects to this page. | |
| instance.jsp | Queries depot for report instance and invokes the specified xsl stylesheet on it. | |
| query.jsp | Page for managing stored queries in the Consumer. Queries can be added, deleted, changed, viewed or executed (and return XML). This jsp page manages the query manipulation via jsp tags and leaves the display of the current queries to a stylesheet. | |
| report.jsp | Summary report with graphs of pass/fail status and error frequency. | |
| seriesConfig.jsp | Prints detailed (expanded) information about running reporter series. Linked in ADMIN menu. | |
| status.jsp | Displays current results for a set of suites or cached queries. This page is the action of the index.jsp form to display suite results. | e.g. http://localhost:8080/inca/jsp/status.jsp?suiteNames=sampleSuite&resourceIds=defaultGrid |
| summary.jsp | Shows the average series pass rate by resource and by suite for a given time period. Uses seriesAverages.xsl and periodAverages.xsl to calculate statistics. | |
| summaryDetails.jsp | Displays summary statistic details for resource or suite. Linked from summary.jsp. Uses seriesAverages.xsl and periodAverages.xsl to calculate statistics. | e.g. http://localhost:8080/inca/jsp/summaryDetails.jsp?resource=localResource |
| summaryHistory.jsp | Displays average series pass rate by resource or suite. Uses seriesAverages.xsl and periodAverages.xsl to calculate statistics. | e.g. http://localhost:8080/inca/jsp/summaryHistory.jsp?groupBy=resource |
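The example URLs in Table 2 follow one pattern: the JSP name plus a query string, optionally with "debug=1" to get the raw XML instead of HTML. A minimal Python sketch of that pattern follows. The helper name `jsp_url` is hypothetical, and the base URL is simply the localhost address used in this guide's examples.

```python
from urllib.parse import urlencode

# Consumer location used in this guide's examples; adjust for your deployment.
BASE_URL = "http://localhost:8080/inca/jsp"


def jsp_url(jsp: str, debug: bool = False, **params: str) -> str:
    """Build a URL for one of the default consumer JSPs (illustrative helper)."""
    query = dict(params)
    if debug:
        # debug=1 makes the JSP display its XML rather than the styled HTML.
        query["debug"] = "1"
    if not query:
        return f"{BASE_URL}/{jsp}"
    # Keep "," unescaped so series=<nickname>,<resource> stays readable.
    return f"{BASE_URL}/{jsp}?{urlencode(query, safe=',')}"


if __name__ == "__main__":
    print(jsp_url("status.jsp", suiteNames="sampleSuite", resourceIds="defaultGrid"))
    print(jsp_url("graph.jsp", debug=True,
                  series="ant_helloworld_compile_test,localResource"))
```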

The default JSPs use the XSL stylesheets in $INCA_DIST/webapps/inca/xsl to transform the XML into HTML. The following stylesheets are installed with the default consumer:

Table 3. Default Consumer XSL

| Name | Applied To | Purpose |
|---|---|---|
| config.xsl | config.jsp | Prints description of deployed suites and series. |
| create-query.xsl | status.jsp | Prints form to select series and resources to query. |
| default.xsl | status.jsp | Prints table of suite(s) results. |
| error.xsl | error.jsp | Displays JSP error message and usage information. |
| footer.xsl | (included in most other xsl files) | Prints HTML page footer with the Inca logo. |
| google.xsl | status.jsp | Prints google map summary of current data. |
| graph.xsl | status.jsp | Prints form to select series to graph. |
| header.xsl | (included in most other xsl files) | Prints HTML page header. |
| inca-common.xsl | (included in most other stylesheets) | Common templates for use in Inca stylesheets. |
| index.xsl | index.jsp | Lists all configured suite and resource names in an HTML form whose action is to display results for the selected suite and resource. |
| instance.xsl | instance.jsp | Prints HTML table with report details. |
| legend.xsl | (included in default.xsl and swStack.xsl) | Prints a key to cell colors and text. |
| periodAverages.xsl | summary.jsp, summaryDetails.jsp, summaryHistory.jsp | Computes pass percentage for suites and resources for a given period. |
| query.xsl | query.jsp | Creates form to manipulate hql queries. |
| seriesAverages.xsl | summary.jsp, summaryDetails.jsp, summaryHistory.jsp | Computes series pass percentages. |
| seriesSummary.xsl | status.jsp | Print out a table of stats for individual series. |
| swStack.xsl | status.jsp | Prints table of suite(s) results. Uses XML file to format table rows by software categories and packages. |

6.8. REST URLs

Inca provides the ability to fetch suite or stored query data in XML or HTML format using REST URLs. By default, the consumer recognizes a REST URL using the following format:

http://localhost:8080/inca/XML|HTML/rest/<suiteName>|<queryName>[/<resourceId>[/<seriesNickname>[/<timestamp>|week|month|quarter|year]]]

Table 4. Examples of Inca REST URLs

| REST URL (equivalent URL shown under REST URL) | Returns |
|---|---|
| http://localhost:8080/inca/HTML/rest/sampleSuite equivalent to: http://localhost:8080/inca/jsp/status.jsp?suiteNames=sampleSuite | An HTML table of latest results for the specified suite (or query). Generated by applying an XSL stylesheet to an array of report summaries. |
| http://localhost:8080/inca/HTML/rest/sampleSuite/defaultGrid equivalent to: http://localhost:8080/inca/jsp/status.jsp?suiteNames=sampleSuite&resourceIds=defaultGrid | An HTML table of latest results for the specified suite and resource. Generated by applying an XSL stylesheet to an array of report summaries. |
| http://localhost:8080/inca/HTML/rest/sampleSuite/localResource/ant_version equivalent to: http://localhost:8080/inca/jsp/instance.jsp?nickname=ant_version&resource=localResource&collected=2010-06-14T12:52:00.000-07:00 (collected param would vary) | An HTML table of the *latest* report details for the specified suite, resource and series. |
| equivalent to: | An HTML table of the report details for the specified suite, resource, series and timestamp. |
| http://localhost:8080/inca/HTML/rest/sampleSuite/localResource/ant_version/week equivalent to: http://localhost:8080/inca/jsp/graph.jsp?series=ant_helloworld_compile_test,localResource&startDate=060710 (startDate param would vary) | Graph of weekly historical results for the specified suite, resource and series (also available for month, quarter or year). Generated by graphing an array of graph instances. |

To fetch the data as XML instead, replace "HTML" with "XML" in the URL, for example:

http://localhost:8080/inca/XML/rest/sampleSuite/localResource
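The REST URL format shown earlier in this section nests its optional parts: a series nickname only makes sense after a resource id, and a timestamp or period only after a series nickname. The following Python sketch assembles such URLs under that reading. The function name `rest_url` and its parameter names are hypothetical, and the values are inserted as-is without any escaping.

```python
from typing import Optional


def rest_url(fmt: str, name: str,
             resource_id: Optional[str] = None,
             series_nickname: Optional[str] = None,
             when: Optional[str] = None,
             base: str = "http://localhost:8080/inca",
             rest_id: str = "rest") -> str:
    """Assemble an Inca REST URL for a suite or stored query (illustrative)."""
    if fmt not in ("XML", "HTML"):
        raise ValueError("fmt must be 'XML' or 'HTML'")
    optional = [resource_id, series_nickname, when]
    segments = [name]
    for value in optional:
        if value is None:
            break
        segments.append(value)
    # Each optional segment requires all the segments before it.
    used = len(segments) - 1
    if any(value is not None for value in optional[used:]):
        raise ValueError("optional segments must be given in order")
    return f"{base}/{fmt}/{rest_id}/" + "/".join(segments)


if __name__ == "__main__":
    print(rest_url("XML", "sampleSuite", "localResource"))
    print(rest_url("HTML", "sampleSuite", "localResource", "ant_version", "week"))
```

The `when` argument stands for either a timestamp or one of week, month, quarter or year. The `rest_id` argument corresponds to the 'rest' path element, which can be renamed in web.xml.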

If you would like to change the id 'rest' to a more transparent id such as 'kit-status-v1', edit <context-param> in $INCA_DIST/webapps/inca/WEB-INF/web.xml and restart the consumer. For example, change

    <context-param>
      <param-name>restId</param-name>
      <param-value>rest</param-value>
    </context-param>

to

    <context-param>
      <param-name>restId</param-name>
      <param-value>kit-status-v1</param-value>
    </context-param>
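If the restId change needs to be scripted (for example, across several installations), it can be sketched in Python with the standard library's ElementTree. The helper name `set_rest_id` is hypothetical. The sketch matches elements by local name so it works whether or not web.xml declares a namespace. Note that ElementTree drops comments and may rewrite namespace prefixes, so editing the file by hand may be preferable, and the consumer still has to be restarted afterwards.

```python
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Strip a '{namespace}' prefix from an ElementTree tag, if present."""
    return tag.rsplit("}", 1)[-1]


def set_rest_id(web_xml: str, new_id: str) -> str:
    """Return web.xml text with the restId context-param set to new_id."""
    root = ET.fromstring(web_xml)
    for elem in root.iter():
        if local_name(elem.tag) != "context-param":
            continue
        children = {local_name(child.tag): child for child in elem}
        name = children.get("param-name")
        value = children.get("param-value")
        if (name is not None and value is not None
                and (name.text or "").strip() == "restId"):
            value.text = new_id
            return ET.tostring(root, encoding="unicode")
    raise ValueError("no restId context-param found")


if __name__ == "__main__":
    sample = (
        "<web-app><context-param>"
        "<param-name>restId</param-name><param-value>rest</param-value>"
        "</context-param></web-app>"
    )
    print(set_rest_id(sample, "kit-status-v1"))
```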

6.9. Changing the Look

6.9.1. Colors and Fonts

Properties such as colors and fonts are controlled by the default CSS (Cascading Style Sheet) file $INCA_DIST/webapps/inca/css/inca.css. The drop down nav bar in the header is controlled by $INCA_DIST/webapps/inca/css/nav.css. You can edit these files to customize the consumer display. For example, to change the color in the reporter detail pages header bar, edit inca.css and change lines 111-112 to:

    .header {
      background-color: #D07651;

For general information, visit the [ CSS tutorial ].

6.9.2. HTML Layout

To modify the default HTML layout, edit the XSL stylesheet that is being applied to the JSP or create a new stylesheet in $INCA_DIST/webapps/inca/xsl and pass it in the JSP "xsl" parameter. The default JSP and XSL files are described in Section 6.7.

For general information about editing stylesheets, visit the [ XSL tutorial ].

6.9.2.1. Custom Values in HTML Table Cells

To display report values other than the default text of either a software version, "pass", or "error" on the suite results pages, edit default.xsl.

For example, to make the default suite status print the time at which successful reporters ran and a truncated error message for failures, edit default.xsl: add a new variable for the custom table cell text and print it instead of the default text:

    <xsl:variable name="cellText">
      <xsl:choose>
        <xsl:when test="string($instance)=''">
          <xsl:value-of select="''" />
        </xsl:when>
        <xsl:when test="string($result/body)!=''
                        and string($result/errorMessage)=''
                        and ($comparitor='Success' or count($comparitor)=0)">
          passed:
          <!-- get yyyy-mm-dd from gmt timestamp -->
          <xsl:value-of select="substring($result/gmt, 1, 10)" />
          <!-- get HH:MM from gmt timestamp -->
          <xsl:value-of select="substring($result/gmt, 12, 5)" />
        </xsl:when>
        <xsl:otherwise>
          error:
          <xsl:value-of select="substring($result/errorMessage, 1, 30)" />
        </xsl:otherwise>
      </xsl:choose>
    </xsl:variable>
    <xsl:choose>
      <xsl:when test="$exit!=''">
        <td class="{$exit}">
          <a href="{$href}"><xsl:value-of select="$cellText"/></a>
          <xsl:if test="$url[matches(., 'markOld')]">
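To make the branching in the cellText variable easier to follow, here is the same decision logic as a Python sketch. It is only an illustration and not part of Inca: the function name `cell_text` is hypothetical, its arguments stand in for the XSL variables, and a space is inserted between the date and time for readability. XPath's substring() is 1-based, so substring(gmt, 12, 5) corresponds to the Python slice [11:16].

```python
from typing import Optional


def cell_text(instance: str, body: str, error_message: str, gmt: str,
              comparitor: Optional[str] = None) -> str:
    """Mirror the cellText <xsl:choose> branches (illustrative only)."""
    # First branch: no report instance, so the cell stays empty.
    if not instance:
        return ""
    # Second branch: a body, no error message, and either no comparison
    # result at all (None) or a comparison that succeeded.
    passed = (body != "" and error_message == ""
              and comparitor in (None, "Success"))
    if passed:
        date = gmt[0:10]    # substring($result/gmt, 1, 10)  -> yyyy-mm-dd
        time = gmt[11:16]   # substring($result/gmt, 12, 5)  -> HH:MM
        return f"passed: {date} {time}"
    # Otherwise: print the first 30 characters of the error message.
    return f"error: {error_message[:30]}"


if __name__ == "__main__":
    print(cell_text("1", "<version>1.7</version>", "", "2010-06-14T19:52:00Z"))
    print(cell_text("1", "", "ant: command not found in PATH on this host",
                    "2010-06-14T19:52:00Z"))
```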

6.9.3. Custom Page Header

The default page header is generated by the inclusion of the header.xsl file in other xsl files. The header is a navigation bar with drop down links to a set of default status pages:

The header's navigation bar is an HTML unordered list that is formatted with the nav.css stylesheet. To add or remove links in the navigation bar, open header.xsl and change the appropriate link. For example, to show a table of suite results for a new suite called "newSuite" on a new resource called "newResource":

    <li><h2>Current Data</h2>
      <ul>
        <li>
          <a href="'status.jsp?xsl=default.xsl&amp;suiteNames=newSuite&amp;resourceIds=newResource'">
            table of newSuite results</a>
        </li>